Review of Introduction to Psycholinguistics: Understanding Language Science by M. J. Traxler

Overall an interesting introduction. Some chapters were much more interesting to me than others, which were somewhere between kinda boring and boring. Generally, the book is way too light on the statistical features of the studies cited. When I hear of a purportedly great study, I want to know the sample size and the significance of the results.

http://gen.lib.rus.ec/book/index.php?md5=2f8ef6d7091552204272a754eca2d7dc&open=0

---

Why does Pirahã lack recursion? Everett’s (2008) answer is that Pirahã lacks recursion because recursion introduces statements into a language that do not make direct assertions about the world. When you say, Give me the nails that Dan bought, that statement presupposes that it is true that Dan bought the nails, but it does not say so outright. In Pirahã, each of the individual sentences is a direct statement or assertion about the world. “Give me the nails” is a command equivalent to “I want the nails” (an assertion about the speaker’s mental state). “Dan bought the nails” is a direct assertion of fact, again expressing the speaker’s mental state (“I know Dan bought those nails”). “They are the same” is a further statement of fact.

Everett describes the Pirahã as being a very literal-minded people. They have no creation myths. They do not tell fictional stories. They do not believe assertions made by others about past events unless the speaker has direct knowledge of the events, or knows someone who does. As a result, they are very resistant to conversion to Christianity, or any other faith that requires belief in things unseen. Everett argues that these cultural principles determine the form of Pirahã grammar. Specifically, because the Pirahã place great store in first-hand knowledge, sentences in the language must be assertions. Nested statements, like relative clauses, require presuppositions (rather than assertions) and are therefore ruled out. If Everett is right about this, then Pirahã grammar is shaped by Pirahã culture. The form their language takes is shaped by their cultural values and the way they relate to one another socially. If this is so, then Everett’s study of Pirahã grammar would overturn much of the received wisdom on where grammars come from and why they take the form they do.

Which leads us to …

Interesting hypothesis.

-

In an attempt to gather further evidence regarding these possibilities, Savage-Rumbaugh raised a chimp named Panpanzee and a bonobo named Panbanisha, starting when they were infants, in a language-rich environment. Chimpanzees are the closest species to humans. The last common ancestor of humans and chimpanzees lived between about 5 million and 8 million years ago. Bonobos are physically similar to chimpanzees, although bonobos are a bit smaller on average. Bonobos as a group also have social characteristics that distinguish them from chimpanzees. They tend to show less intra-species aggression and are less dominated by male members of the species.[9] Despite the physical similarities, the two species are biologically distinct. By testing both a chimpanzee and a bonobo, Savage-Rumbaugh could hold environmental factors constant while observing change over time (ontogeny) and differences across the two species (phylogeny). If the two animals acquired the same degree of language skill, this would suggest that cultural or environmental factors have the greatest influence on their language development. Differences between them would most likely reflect phylogenetic biological differences between the two species. Differences in skill over time would most likely reflect ontogenetic or maturational factors.

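The author clearly forgets about individual differences in ability: with only one animal per species, a difference between Panpanzee and Panbanisha could just as well be an individual difference as a species difference. Such differences are also found in monkeys (indeed, in any animal in which g is a polygenic trait).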

-

The fossil record shows that human ancestors before Homo sapiens emerged, between about 70,000 and 200,000 years ago, had some of the cultural and physical characteristics of modern humans, including making tools and cooking food. If we assume that modern language emerged sometime during the Homo sapiens era, then it would be nice to know why it emerged then, and not before. One possibility is that a general increase in brain size relative to body weight in Homo sapiens led to an increase in general intelligence, and this increase in general intelligence triggered a language revolution. On this account, big brain comes first and language emerges later. This hypothesis leaves a number of questions unanswered, however, such as, what was that big brain doing before language emerged? If the answer is “not that much,” then why was large brain size maintained in the species (especially when you consider that the brain demands a huge proportion of the body’s resources)? And if language is an optional feature of big, sapiens brains, why is it a universal characteristic among all living humans? Also, why do some groups of humans who have smaller sized brains nonetheless have fully developed language abilities?

Interesting that he doesn't cite a reference for this claim, or for the earlier claim that larger brains relative to body weight raised general intelligence. I'm more curious, though, whether there is really no difference in language sophistication between groups with different brain sizes (and g). I'm thinking of spoken language. Perhaps it's time to revise that claim. We know that g has huge effects on people's vocabulary size (vocabulary is one of the most g-loaded subtests), which concerns both spoken and written language. However, the grammars and morphologies of many languages found in low-g countries are indeed very sophisticated.

-

As a result of concerns like those raised by Pullum, as well as studies showing that speakers of different languages perceive the world similarly, many language scientists have viewed linguistic determinism as being dead on arrival (see, e.g., Pinker, 1994). Many of them would argue that language serves thought, rather than dictating to it. If we ask the question, what is language good for? one of the most obvious answers is that language allows us to communicate our thoughts to other people. That being the case, we would expect language to adapt to the needs of thought, rather than the other way around. If an individual or a culture discovers something new to say, the language will expand to fit the new idea (as opposed to preventing the new idea from being hatched, as the Whorfian hypothesis suggests). This anti-Whorfian position does enjoy a certain degree of support from the vocabularies of different languages, and different subcultures within individual languages. For example, the class of words that refer to objects and events (open class) changes rapidly in cultures where there is rapid technological or social changes (such as most Western cultures). The word internet did not exist when I was in college, mumble mumble years ago. The word Google did not exist 10 years ago. When it first came into the language, it was a noun referring to a particular web-browser. Soon after, it became a verb that meant “to search the internet for information.” In this case, technological, cultural, and social developments caused the language to change. Thought drove language. But did language also drive thought? Certainly. If you hear people saying “Google,” you are going to want to know what they mean. You are likely to engage with other speakers of your language until this new concept becomes clear to you. Members of subcultures, such as birdwatchers or dog breeders, have many specialist terms that make their communication more efficient, but there is no reason to believe that you need to know the names for different types of birds before you can perceive the differences between them—a bufflehead looks different than a pintail no matter what they’re called.

The author apparently gets his etymology wrong. Google was never the name of a browser; that's something computer-illiterate people think, cf. https://www.youtube.com/watch?v=o4MwTvtyrUQ

No... Google's Chrome is a browser. Google is a search engine. And “to google” something means to search for it using Google, the search engine. Although now it has changed somewhat to mean “search the internet for”, similar to the meaning change of Kleenex.

-

Different languages express numbers in different ways, so language could influence the way children in a given culture acquire number concepts (Hunt & Agnoli, 1991; Miller & Stigler, 1987). Chinese number words differ from English and some other languages (e.g., Russian) because the number words for 11–19 are more transparent in Chinese than in English. In particular, Chinese number words for the teens are the equivalent of “ten-one,” “ten-two,” “ten-three” and so forth. This makes the relationship between the teens and the single digits more obvious than equivalent English terms, such as twelve. As a result, children who speak Chinese learn to count through the teens faster than children who speak English. This greater accuracy at producing number words leads to greater accuracy when children are given sets of objects and are asked to say how many objects are in the set. Chinese-speaking children performed this task more accurately than their English-speaking peers, largely because they made very few errors in producing number words while counting up the objects. One way to interpret these results is to propose that the Chinese language makes certain relationships more obvious (that numbers come in groups of ten; that there’s a relationship between different numbers that end in the word “one”), and making those relationships more obvious makes the counting system easier to learn.[22]

This hypothesis is certainly plausible, but the average g difference between Chinese children and American children is a confound that needs to be dealt with.
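The transparency claim can be made concrete with a toy namer. This is a minimal sketch: numbers from 10 to 99 are built the Chinese way (tens digit, "ten", units digit), but with English glosses instead of actual Chinese words, so only the structure carries over. The names `UNITS` and `transparent_name` are my own.

```python
UNITS = ["one", "two", "three", "four", "five", "six", "seven", "eight", "nine"]

def transparent_name(n: int) -> str:
    """Gloss n (10..99) the way Chinese builds it: [tens digit] ten [units digit].

    The words are English glosses, not Chinese; only the compositional pattern matters.
    """
    if not 10 <= n <= 99:
        raise ValueError("only 10..99 is handled in this sketch")
    tens, units = divmod(n, 10)
    parts = [] if tens == 1 else [UNITS[tens - 1]]  # the teens start with plain "ten"
    parts.append("ten")
    if units:
        parts.append(UNITS[units - 1])
    return "-".join(parts)

for n in range(11, 20):
    print(n, transparent_name(n))  # 11 ten-one, 12 ten-two, ..., 19 ten-nine
```

Every teen ends in the name of its units digit, so a child who knows one to nine gets the teens almost for free; English eleven and twelve give no such cue.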

It would be interesting to see a Scandinavian comparison, because Danish has a horrible numeral system while Swedish has a better one. Both have awkward teen numbers, but the tens are much more meaningful in Swedish: e.g. fem-tio (five-ten) vs. Danish halv-treds (half-third), a remnant of a system based on twenties, where half-third means 2.5 and 2.5 × 20 = 50.
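The arithmetic behind the Danish tens can be spelled out. This is a sketch that assumes the usual etymology: tres and firs are "three score" and "four score", and the halv- forms mean "half the nth score", i.e. n − 1/2 twenties. The helper and dictionary names are mine. The Swedish forms are included for contrast.

```python
from fractions import Fraction

def half_nth(n: int) -> Fraction:
    """Old Danish 'half-nth': the nth unit is only half there, so n - 1/2 (halvtredje = 2.5)."""
    return Fraction(n) - Fraction(1, 2)

# Danish tens 50..90 expressed as multiples of a score (20).
DANISH_TENS = {
    "halvtreds": half_nth(3) * 20,   # half-third score: 2.5 * 20
    "tres": Fraction(3) * 20,        # three score
    "halvfjerds": half_nth(4) * 20,  # half-fourth score: 3.5 * 20
    "firs": Fraction(4) * 20,        # four score
    "halvfems": half_nth(5) * 20,    # half-fifth score: 4.5 * 20
}

# Swedish tens 50..90 are just digit + "tio" (ten).
SWEDISH_TENS = {"femtio": 5 * 10, "sextio": 6 * 10, "sjuttio": 7 * 10, "åttio": 8 * 10, "nittio": 9 * 10}

for (da, da_value), (sv, _) in zip(DANISH_TENS.items(), SWEDISH_TENS.items()):
    print(f"{int(da_value)}: Danish {da}, Swedish {sv}")
```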

-

So how are word meanings (senses, that is) represented in the mental lexicon? And what research tools are appropriate to investigating word representations? One approach to investigating word meaning relies on introspection—thinking about word meanings and drawing conclusions from subjective experience. It seems plausible, based on introspection, that entries in the mental lexicon are close analogs to dictionary entries. If so, the lexical representation of a given word would incorporate information about its grammatical function (what category does it belong to, verb, noun, adjective, etc.), which determines how it can combine with other words (adverbs go with verbs, adjectives with nouns). Using words in this sense involves the assumption that individual words refer to types—that the core meaning of a word is a pointer to a completely interchangeable set of objects in the world (Gabora, Rosch, & Aerts, 2008). Each individual example of a category is a token. So, team is a type, and Yankees, Twins, and Mudhens are tokens of that type.[2]

He has misunderstood the type-token terminology. I will quote the SEP (Stanford Encyclopedia of Philosophy):

http://plato.stanford.edu/entries/types-tokens/#DisBetTypTok

1.1 What the Distinction Is

The distinction between a type and its tokens is an ontological one between a general sort of thing and its particular concrete instances (to put it in an intuitive and preliminary way). So for example consider the number of words in the Gertrude Stein line from her poem Sacred Emily on the page in front of the reader's eyes:

Rose is a rose is a rose is a rose.

In one sense of ‘word’ we may count three different words; in another sense we may count ten different words. C. S. Peirce (1931-58, sec. 4.537) called words in the first sense “types” and words in the second sense “tokens”. Types are generally said to be abstract and unique; tokens are concrete particulars, composed of ink, pixels of light (or the suitably circumscribed lack thereof) on a computer screen, electronic strings of dots and dashes, smoke signals, hand signals, sound waves, etc. A study of the ratio of written types to spoken types found that there are twice as many word types in written Swedish as in spoken Swedish (Allwood, 1998). If a pediatrician asks how many words the toddler has uttered and is told “three hundred”, she might well enquire “word types or word tokens?” because the former answer indicates a prodigy. A headline that reads “From the Andes to Epcot, the Adventures of an 8,000 year old Bean” might elicit “Is that a bean type or a bean token?”.

He seems to be talking about members of sets.

Or maybe not; perhaps his usage is just idiosyncratic, for in note 2 to the above passage he writes:

Team itself can be a token of a more general category, like organization (team, company, army). Type and token are used differently in the speech production literature. There, token is often used to refer to a single instance of a spoken word; type is used to refer to the abstract representation of the word that presumably comes into play every time an individual produces that word.
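The SEP's rose example can be checked mechanically. This is a minimal sketch that assumes a "word" is a run of letters and that case is ignored, so "Rose" and "rose" are the same type. The function name is mine.

```python
import re

def types_and_tokens(text: str) -> tuple[int, int]:
    """Return (number of word types, number of word tokens) in text, ignoring case."""
    tokens = re.findall(r"[a-z]+", text.lower())  # every occurrence is a token
    return len(set(tokens)), len(tokens)          # distinct forms are the types

print(types_and_tokens("Rose is a rose is a rose is a rose."))  # (3, 10)
```

Under the Peirce usage quoted above, the team/Yankees relation in the book is category membership, not type/token. Here a type is the abstract word and a token is each of its occurrences.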

-

We could look at a corpus and count up every time the word dogs appears in exactly that form. We could count up the number of times that cats appears in precisely that form. In that case we would be measuring surface frequency—how often the exact word occurs. But the words dogs and cats are both related to other words that share the same root morpheme. We could decide to ignore minor differences in surface form and instead concentrate on how often the family of related words appears. If so, we would treat dog, dogs, dog-tired, and dogpile as being a single large class, and we would count up the number of times any member of the class appears in the corpus. In that case, we would be measuring root frequency—how often the shared word root appears in the language. Those two ways of counting frequency can come up with very different estimates. For example, perhaps the exact word dog appears very often, but dogpile appears very infrequently. If we base our frequency estimate on surface frequency, dogpile is very infrequent. But if we use root frequency instead, dogpile is very frequent, because it is in the class of words that share the root dog, which appears fairly often.

If we use these different frequency estimates (surface frequency and root frequency) to predict how long it will take people to respond on a reaction time task, root frequency makes better predictions than surface frequency does. A word that has a low surface frequency will be responded to quickly if its root frequency is high (Bradley, 1979; Taft, 1979, 1994). This outcome is predicted by an account like FOBS that says that word forms are accessed via their roots, and not by models like logogen where each individual word form has a separate entry in the mental lexicon.
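The two counting schemes can be sketched in a few lines. The corpus and the root table below are invented for illustration. A real study would use a large corpus and a morphological database.

```python
from collections import Counter

# Toy corpus and hand-made root table (both hypothetical).
corpus = "the dog saw a dog and two dogs near a dogpile while the cat watched the cats".split()
ROOT_OF = {"dog": "dog", "dogs": "dog", "dog-tired": "dog", "dogpile": "dog", "cat": "cat", "cats": "cat"}

surface_freq = Counter(corpus)                                            # exact word forms
root_freq = Counter(ROOT_OF[word] for word in corpus if word in ROOT_OF)  # whole root families

def frequencies(word: str) -> tuple[int, int]:
    """Return (surface frequency, root frequency) of a word in the toy corpus."""
    return surface_freq[word], root_freq[ROOT_OF[word]]

print(frequencies("dogpile"))  # (1, 4): rare as a form, common as a family
```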

Further evidence for the morphological decomposition hypothesis comes from priming studies involving words with real and pseudo-affixes. Many polymorphemic words are created when derivational affixes are added to a root. So, we can take the verb grow and turn it into a noun by adding the derivational suffix -er. A grower is someone who grows things. There are a lot of words that end in -er and have a similar syllabic structure to grower, but that are not real polymorphemic words. For example, sister looks a bit like grower. They both end in -er and they both have a single syllable that precedes -er. According to the FOBS model, we have to get rid of the affixes before we can identify the root. So, anything that looks or sounds like it has a suffix is going to be treated like it really does have a suffix, even when it doesn’t. Even though sister is a monomorphemic word, the lexical access process breaks it down into a pseudo- (fake) root, sist, and a pseudo-suffix, -er. After the affix stripping process has had a turn at breaking down sister into a root and a suffix, the lexical access system will try to find a bin that matches the pseudo-root sist. This process will fail, because there is no root morpheme in English that matches the input sist. In that case, the lexical access system will have to re-search the lexicon using the entire word sister. This extra process should take extra time, therefore the affix stripping hypothesis predicts that pseudo-suffixed words (like sister) should take longer to process than words that have a real suffix (like grower). This prediction has been confirmed in a number of reaction time studies—people do have a harder time recognizing pseudo-suffixed words than words with real suffixes (Lima, 1987; Smith & Sterling, 1982; Taft, 1981). People also have more trouble rejecting pseudo-words that are made up of a prefix (e.g., de) and a real root morpheme (e.g., juvenate) than a comparable pseudo-word that contains a prefix and a non-root (e.g., pertoire). This suggests that morphological decomposition successfully accesses a bin in the dejuvenate case, and people are able to rule out dejuvenate as a real word only after the entire bin has been fully searched (Taft & Forster, 1975). Morphological structure may also play a role in word learning. When people are exposed to novel words that are made up of real morphemes, such as genvive (related to the morpheme vive, as in revive) they rate that stimulus as being a better English word and they recognize it better than an equally complex stimulus that does not incorporate a familiar root (such as gencule) (Dorfman, 1994, 1999).

In which case, English is a pretty bad language in this respect, as it tends not to reuse roots, e.g. “garlic” vs. Danish “hvidløg” (white-onion), or “edible” vs. “eatable” (eat-able). Esperanto should do pretty well in such a comparison.
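A toy version of the affix-stripping step makes the sister/grower prediction concrete. This is a sketch under simplifying assumptions. Only suffixes are stripped, the lexicon is a hand-made dictionary of root bins, and "processing time" is approximated by the number of lexicon searches. The names `LEXICON`, `strip_suffix` and `access` are hypothetical and do not come from Taft and Forster.

```python
# Hypothetical lexicon: bins keyed by root morpheme, each listing the words stored in it.
LEXICON = {
    "grow": {"grow", "grows", "grower"},
    "teach": {"teach", "teaches", "teacher"},
    "sister": {"sister", "sisters"},
}
SUFFIXES = ("er", "es", "s")

def strip_suffix(word: str) -> str:
    """Remove the first matching suffix, leaving at least three letters (a crude rule)."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def access(word: str) -> tuple[bool, int]:
    """Return (found, number of lexicon searches) for toy affix-stripping lexical access."""
    root = strip_suffix(word)
    searches = 1
    if root in LEXICON:                  # a bin matches the (real or pseudo) root
        return word in LEXICON[root], searches
    searches += 1                        # pseudo-root failed: re-search with the whole word
    return word in LEXICON.get(word, set()), searches

print(access("grower"), access("sister"))  # (True, 1) (True, 2)
```

The extra search for sister mirrors the extra time the affix-stripping hypothesis predicts for pseudo-suffixed words.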

-

When comprehenders demonstrate sensitivity to subcategory preference information (the fact that some structures are easier to process than others when a sentence contains a particular verb), they are behaving in ways that are consistent with the tuning hypothesis. The tuning hypothesis says, “that structural ambiguities are resolved on the basis of stored records relating to the prevalence of the resolution of comparable ambiguities in the past” (Mitchell, Cuetos, Corley, & Brysbaert, 1995, p. 470; see also Bates & MacWhinney, 1987; Ford, Bresnan, & Kaplan, 1982; MacDonald et al., 1994). In other words, people keep track of how often they encounter different syntactic structures, and when they are uncertain about how a particular string of words should be structured, they use this stored information to rank the different possibilities. In the case of subcategory preference information, the frequencies of different structures are tied to specific words—verbs in this case. The next section will consider the possibility that frequencies are tied to more complicated configurations of words, rather than to individual words.

This seems like a plausible account of why practicing can boost reading speed.
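The bookkeeping that the tuning hypothesis assumes can be sketched as per-verb counts. This is a minimal sketch. The class name, the structure labels (DO for direct object, SC for sentential complement) and all counts are invented for illustration and are not taken from Mitchell et al. or from any corpus.

```python
from collections import Counter, defaultdict

class StructureTally:
    """Per-verb record of how past structural ambiguities turned out (toy tuning model)."""

    def __init__(self) -> None:
        self.counts = defaultdict(Counter)  # verb -> Counter of structures seen with it

    def observe(self, verb: str, structure: str, times: int = 1) -> None:
        self.counts[verb][structure] += times

    def rank(self, verb: str) -> list[str]:
        """Candidate structures for this verb, most often encountered first."""
        return [structure for structure, _ in self.counts[verb].most_common()]

# Invented experience (not corpus data), e.g. for "The student forgot the solution ...".
tally = StructureTally()
tally.observe("forgot", "DO", 8)
tally.observe("forgot", "SC", 2)
tally.observe("claimed", "SC", 9)
tally.observe("claimed", "DO", 1)

print(tally.rank("forgot"), tally.rank("claimed"))  # ['DO', 'SC'] ['SC', 'DO']
```

With more practice the counts grow and the rankings settle, which fits the idea that reading experience speeds up ambiguity resolution.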

-

The other way that proposition is defined in construction–integration theory is, “The smallest unit of meaning that can be assigned a truth value.” Anything smaller than that is a predicate or an argument. Anything bigger than that is a macroproposition. So, wrote is a predicate, and wrote the company is a predicate and one of its arguments. Neither is a proposition, because neither can be assigned a truth value. That is, it doesn’t make sense to ask, “True or false: wrote the company?” But it does make sense to ask, “True or false: The customer wrote the company?” To answer that question, you would consult some representation of the real or an imaginary world, and the statement would either accurately describe the state of affairs in that world (i.e., it would be true) or it would not (i.e., it would be false).

Although the precise mental mechanisms that are involved in converting the surface form to a set of propositions have not been worked out, and there is considerable debate about the specifics of propositional representation (see, e.g., Kintsch, 1998; Perfetti & Britt, 1995), a number of experimental studies have supported the idea that propositions are a real element of comprehenders’ mental representations of texts (van Dijk & Kintsch, 1983). In other words, propositions are psychologically real—there really are propositions in the head. For example, Ratcliff and McKoon (1978) used priming methods to find out how comprehenders’ memories for texts are organized. There are a number of possibilities. It could be that comprehenders’ memories are organized to capture pretty much the verbatim information that the text conveyed. In that case, we would expect that information that is nearby in the verbatim form of the text would be very tightly connected in the comprehender’s memory of that text. So, for example, if you had a sentence like (2) (from Ratcliff & McKoon, 1978)

(2) The geese crossed the horizon as the wind shuffled the clouds.

the words horizon and wind are pretty close together, as they are separated by only two short function words. If the comprehender’s memory of the sentence is based on remembering it as it appeared on the page, then horizon should be a pretty good retrieval cue for wind (and vice versa).

If we analyze sentence (2) as a set of propositions, however, we would make a different prediction. Sentence (2) represents two connected propositions, because there are two predicates, crossed and shuffled. If we built a propositional representation of sentence (2), we would have a macroproposition (a proposition that is itself made up of other propositions), and two micropropositions (propositions that combine to make up macropropositions). The macroproposition is:

as (Proposition 1, Proposition 2)

The micropropositions are:

Proposition 1: crossed [geese, the horizon]

Proposition 2: shuffled [the wind, the clouds]

Notice that the propositional representation of sentence (2) has horizon in one proposition, and wind in another. According to construction–integration theory, all of the elements that go together to make a proposition should be more tightly connected in memory to each other than to anything else in the sentence. As a result, two words from the same proposition should make better retrieval cues than two words from different propositions. Those predictions can be tested by asking subjects to read sentences like (2), do a distractor task for a while, and then write down what they can remember about the sentences later on. On each trial, one of the words from the sentence will be used as a retrieval cue or reminder. So, before we ask the subject to remember sentence (2), we will give her a hint. The hint (retrieval cue) might be a word from proposition 1 (like horizon) or a word from proposition 2 (like clouds), and the dependent measure would be the likelihood that the participant will remember a word from the second proposition (like wind). Roger Ratcliff and Gail McKoon found that words that came from the same proposition were much better retrieval cues (participants were more likely to remember the target word) than words from different propositions, even when distance in the verbatim form was controlled. In other words, it does not help that much to be close to the target word in the verbatim form of the sentence unless the reminder word is also from the same proposition as the target word (see also Wanner, 1975; Weisberg, 1969).

I'm surprised to see empirical evidence for this, but it is very neat when science does that: converges on the same result from two different angles (in this case metaphysics and linguistics).

As for his micro-/macroproposition terminology, logicians normally call these atomic and compound (non-atomic) propositions, respectively.
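The two competing predictions can be checked on sentence (2) itself. This is a small sketch that assumes the two micropropositions above, with arguments reduced to their head nouns. The helper names are mine.

```python
from typing import Optional

SENTENCE = "The geese crossed the horizon as the wind shuffled the clouds".split()

# Micropropositions of sentence (2), arguments reduced to head nouns.
PROPOSITIONS = {
    "P1": ("crossed", ("geese", "horizon")),
    "P2": ("shuffled", ("wind", "clouds")),
}
MACROPROPOSITION = ("as", ("P1", "P2"))  # as (Proposition 1, Proposition 2)

def proposition_of(word: str) -> Optional[str]:
    """Name of the microproposition containing word as predicate or argument, if any."""
    for name, (predicate, arguments) in PROPOSITIONS.items():
        if word == predicate or word in arguments:
            return name
    return None

def verbatim_distance(a: str, b: str) -> int:
    """How many positions apart two words are in the surface string."""
    return abs(SENTENCE.index(a) - SENTENCE.index(b))

for cue in ("horizon", "clouds"):
    same = proposition_of(cue) == proposition_of("wind")
    print(cue, verbatim_distance(cue, "wind"), same)  # horizon 3 False / clouds 3 True
```

Both cues sit three positions from wind, so a verbatim account predicts equal cueing. The propositional account predicts that clouds is the better cue, which is the pattern Ratcliff and McKoon reported.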

-

How does suppression work? Is it as automatic as enhancement? There are a number of reasons to think that suppression is not just a mirror image of enhancement. First, suppression takes a lot longer to work than enhancement does. Second, while knowledge activation (enhancement) occurs about the same way for everyone, not everyone is equally good at suppressing irrelevant information, and this appears to be a major contributor to differences in comprehension ability between different people (Gernsbacher, 1993; Gernsbacher & Faust, 1991; Gernsbacher et al., 1990). For example, Gernsbacher and her colleagues acquired Verbal SAT scores for a large sample of students at the University of Oregon (similar experiments have been done on Air Force recruits in basic training, who are about the same age as the college students). Verbal SAT scores give a pretty good indication of how well people are able to understand texts that they read, and there are considerable differences between the highest and lowest scoring people in the sample. This group of students was then asked to judge whether target words like ace were semantically related to a preceding sentence like (15), above. Figure 5.4 presents representative data from one of these experiments. The left-hand bars show that the ace meaning was highly activated for both good comprehenders (the dark bars) and poorer comprehenders (the light bars) immediately after the sentence. After a delay of one second (a very long time in language processing terms), the good comprehenders had suppressed the contextually inappropriate “playing card” meaning of spade, but the poor comprehenders still had that meaning activated (shown in the right-hand bars of Figure 5.4).

Very neat! Didn't know about this, but it fits very nicely into the ECT (elementary cognitive test) tradition of Jensen. I should probably review this evidence and publish a review in the Journal of Intelligence.

-

To determine whether something is a cause, comprehenders apply the necessity in the circumstances heuristic (which is based on the causal analysis of the philosopher Hegel). The necessity in the circumstances heuristic says that “A causes B, if, in the circumstances of the story, B would not have occurred if A had not occurred, and if A is sufficient for B to occur.”

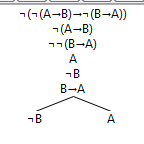

Sounds more like a logical fallacy, i.e. denying the antecedent:

1. A→B
2. ¬A
3. Thus, ¬B

The importance of causal structure in the mental processing of texts can be demonstrated in a variety of ways. First, the propositional structure of texts can be described as a network of causal connections. Some of the propositions in a story will be on the central causal chain that runs from the first proposition in the story (Once upon a time …) to the last (… and they lived happily ever after). Other propositions will be on causal dead-ends or side-plots. In Cinderella, her wanting to go to the ball, the arrival of the fairy godmother, the loss of the glass slipper, and the eventual marriage to the handsome prince, are all on the central causal chain. Many of the versions of the Cinderella story do not bother to say what happens to the evil stepmother and stepsisters after Cinderella gets married. Those events are off the central causal chain and, no matter how they are resolved, they do not affect the central causal chain. As a result, if non-central events are explicitly included in the story, they are not remembered as well as more causally central elements (Fletcher, 1986; Fletcher & Bloom, 1988; Fletcher et al., 1990).

A nice model for memetic evolution.
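The central-chain idea is a simple graph computation. This is a minimal sketch that treats a story as a directed graph of causal links and counts an event as "central" if it lies on some path from the first event to the last. The event labels and links are my own simplification of the Cinderella example, not Fletcher's coding scheme.

```python
from collections import deque

def reachable(graph: dict[str, list[str]], start: str) -> set[str]:
    """All events reachable from start by following causal links (breadth-first search)."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in graph.get(queue.popleft(), []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def central_chain(graph: dict[str, list[str]], first: str, last: str) -> set[str]:
    """Events lying on some causal path from the first event to the last one."""
    backwards: dict[str, list[str]] = {}
    for cause, effects in graph.items():
        for effect in effects:
            backwards.setdefault(effect, []).append(cause)
    return reachable(graph, first) & reachable(backwards, last)

# Hypothetical causal links, simplified from the Cinderella example in the quote.
STORY = {
    "wants to go to the ball": ["fairy godmother arrives"],
    "fairy godmother arrives": ["goes to the ball"],
    "goes to the ball": ["loses glass slipper"],
    "loses glass slipper": ["marries prince"],
    "marries prince": ["lived happily ever after", "stepsisters' fate"],
}

chain = central_chain(STORY, "wants to go to the ball", "lived happily ever after")
print("stepsisters' fate" in chain)  # False: a dead end off the central chain
```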

-

Korean Air had a big problem (Kirk, 2002). Their planes were dropping out of the sky like ducks during hunting season. They had the worst safety record of any major airline. Worried company executives ordered a top-to-bottom review of company policies and practices to find out what was causing all the crashes. An obvious culprit would be faulty aircraft or bad maintenance practices. But their review showed that Korean Air’s aircraft were well maintained and mechanically sound. So what was the problem? It turned out that the way members of the flight crew talked to one another was a major contributing factor in several air disasters. As with many airlines, Korean Air co-pilots were generally junior to the pilots they flew with. Co-pilots’ responsibilities included, among other things, helping the pilot monitor the flight instruments and communicating with the pilot when a problem occurred, including when the pilot might be making an error flying the plane. But in the wider Korean culture, younger people treat older people with great deference and respect, and this social norm influences the way younger and older people talk to one another. Younger people tend to defer to older people and feel uncomfortable challenging their judgment or pointing out when they are about to fly a jet into the side of a mountain. In the air, co-pilots were waiting too long to point out pilot errors, and when they did voice their concerns, their communication style, influenced by a lifetime of cultural conditioning, made it more difficult for pilots to realize when something was seriously wrong. To correct this problem, pilots and co-pilots had to re-learn how to talk to one another. Pilots needed to learn to pay closer attention when co-pilots voiced their opinions, and co-pilots had to learn to be more direct and assertive when communicating with pilots. After instituting these and other changes, Korean Air’s safety record improved and they stopped losing planes.

This one is probably not true. No source given either. Perhaps it's just a case of statistical regression towards the mean: any airline that does badly for chance reasons will tend to recover.

-

To date, experiments on statistical learning in infants have been based on highly simplified mini-languages with very rigid statistical properties. For example, transitional probabilities between syllables are set to 1.0 for “words” in the language, and .33 for pairs of syllables that cut across “word” boundaries.[17] Natural languages have a much wider range of transitional probabilities between syllables, the vast majority of which are far lower than 1.0. Researchers have used mathematical models to simulate learning of natural languages, using samples of real infant-directed speech to train the simulated learner (Yang, 2004). When the model has to rely on transitional probabilities alone, it fails to segment speech accurately. However, when the model makes two simple assumptions about prosody—that each word has a single stressed syllable, and that the prevailing pattern for bisyllables is trochaic (STRONG–weak)—the model is about as accurate in its segmentation decisions as 7½-month-old infants. This result casts doubt on whether the statistical learning strategy is sufficient for infants to learn how to segment naturally occurring speech (and if the strategy is not sufficient, it can not be necessary either).
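The transitional probabilities in such mini-languages are easy to compute. TP(x → y) is the number of times syllable x is immediately followed by y, divided by the number of times x occurs with any successor. This sketch uses an artificial stream and assumes four made-up trisyllabic words in random order with no immediate repeats, so each word is followed by one of the other three. The words, stream length and seed are my choices.

```python
import random
from collections import Counter

# Four made-up trisyllabic "words" (hypothetical stimuli); every syllable is unique.
WORDS = [("tu", "pi", "ro"), ("go", "la", "bu"), ("bi", "da", "ku"), ("pa", "do", "ti")]

def make_stream(n_words: int, seed: int = 0) -> list[str]:
    """Concatenate randomly chosen words into one syllable stream, never repeating a word twice in a row."""
    rng = random.Random(seed)
    stream, previous = [], None
    for _ in range(n_words):
        word = rng.choice([w for w in WORDS if w != previous])
        stream.extend(word)
        previous = word
    return stream

def transitional_probabilities(syllables: list[str]) -> dict[tuple[str, str], float]:
    """TP(x -> y) = count of x immediately followed by y / count of x with a successor."""
    pair_counts = Counter(zip(syllables, syllables[1:]))
    first_counts = Counter(syllables[:-1])
    return {(x, y): n / first_counts[x] for (x, y), n in pair_counts.items()}

tp = transitional_probabilities(make_stream(300))
print(tp[("tu", "pi")])  # 1.0: "pi" always follows "tu" inside the word tupiro
print(sorted(round(p, 2) for (x, _), p in tp.items() if x == "ro"))  # boundary TPs, roughly a third each
```

Within-word transitions come out at exactly 1.0, while transitions across word boundaries hover around the .33 mentioned in the quote.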

More logic errors? Let's translate the talk of sufficient and necessary conditions into logic:

A is a sufficient condition for B, is the same as, A→B

A is a necessary condition for B, is the same as, B→A

The claim that if A is not sufficient for B, then A is not necessary for B, is thus the same as: ¬(A→B)→¬(B→A). Clearly not true: oxygen is necessary for fire but not sufficient for it.
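A brute-force truth table makes "clearly not true" checkable. This is a minimal sketch that reads sufficiency and necessity as material conditionals, exactly as in the translation above. The helper names are mine. The same check also confirms that the denying-the-antecedent form listed earlier (A→B, ¬A, thus ¬B) is invalid.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material conditional p → q."""
    return (not p) or q

def counterexamples(formula):
    """Truth assignments (A, B) that make a two-variable formula false."""
    return [(a, b) for a, b in product([True, False], repeat=2) if not formula(a, b)]

def not_sufficient_so_not_necessary(a: bool, b: bool) -> bool:
    # ¬(A→B) → ¬(B→A)
    return implies(not implies(a, b), not implies(b, a))

def denying_the_antecedent(a: bool, b: bool) -> bool:
    # ((A→B) ∧ ¬A) → ¬B
    return implies(implies(a, b) and not a, not b)

print(counterexamples(not_sufficient_so_not_necessary))  # [(True, False)]
print(counterexamples(denying_the_antecedent))           # [(False, True)]
```

A form is valid only if it has no counterexamples, so each non-empty list shows the corresponding inference is invalid.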

-

So, if a child already knows the name of a concept, she will reject a second label as referring to the same concept. Children can use this principle to figure out the meanings of new words, because applying the principle of contrast rules out possible meanings. If you already know that gavagai means “rabbit,” and your guide points at a rabbit and says, blicket, you will not assume that gavagai and blicket are synonyms. Instead, you will consider the possibility that blicket refers to a salient part of the rabbit (its ears, perhaps) or a type of rabbit or some other salient property of rabbits (that they’re cute, maybe). In the lab, children who are taught two new names while attending to an unfamiliar object interpret the first name as referring to the entire object and the second name as referring to a salient part of the object. For somewhat older children (3–4 years old), parents often provide an explicit contrast when introducing children to new words that label parts of an object (Saylor, Sabbagh, & Baldwin, 2002). So, an adult might point to Flopsy and say, See the bunny? These are his ears. Children do not need such explicit instruction, however, as they appear to spontaneously apply the principle of contrast to deduce meanings for subcomponents of objects (e.g., ears) and substances that objects are made out of (e.g., wood, naugahyde, duck tape).

Dogs (some of them) apparently can also do this. http://www.sciencemag.org/content/304/5677/1682.short
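The principle of contrast, as described above, can be written as a small decision rule. This is a toy sketch, not a model from the book: the lexicon dictionary, the `salient_parts` lookup, and the labels “dax”, “wug”, “novel_object”, and “tail” are hypothetical; “gavagai”, “blicket”, “rabbit”, and “ears” come from the passage.

```python
def interpret_new_label(label, referent, lexicon, salient_parts):
    """Toy principle of contrast: a new label is never taken as a synonym.

    lexicon maps known labels to meanings; salient_parts maps an object to
    candidate part/property meanings (both hypothetical inputs).
    """
    if label in lexicon:
        return lexicon[label]
    known_meanings = set(lexicon.values())
    if referent not in known_meanings:
        # Unnamed object: take the label as naming the whole object.
        meaning = referent
    else:
        # Object already named: pick the first salient part not yet labeled.
        meaning = next(
            (p for p in salient_parts.get(referent, []) if p not in known_meanings), None
        )
    if meaning is not None:
        lexicon[label] = meaning
    return meaning


lexicon = {"gavagai": "rabbit"}
print(interpret_new_label("blicket", "rabbit", lexicon, {"rabbit": ["ears", "tail"]}))  # -> ears

# The lab result: first name -> whole object, second name -> salient part.
lab_lexicon = {}
first = interpret_new_label("dax", "novel_object", lab_lexicon, {"novel_object": ["handle"]})
second = interpret_new_label("wug", "novel_object", lab_lexicon, {"novel_object": ["handle"]})
print(first, second)  # -> novel_object handle
```

Real children weigh many more cues (pointing, gaze, explicit contrasts like “These are his ears”). The rule here only captures the “no synonyms” default the passage describes.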

-

When Chinese was thought of as a pictographic script, it made sense to think that

Chinese script might be processed much differently than English script. But it turns out

that there are many similarities in how the two scripts are processed. For one thing, reading

both scripts leads to the rapid and automatic activation of phonological (sound) codes.

When we read English, we use groups of letters to activate phonological codes automatically

(this is one of the sources of the inner voice that you often hear when you read). The fact

that phonological codes are automatically activated in English reading is shown by

experiments involving semantic categorization tasks where people have to judge whether a

word is a member of a category. Heterophonic (multiple pronunciations) homographs (one

spelling), such as wind, take longer to read than comparably long and frequent regular

words, because reading wind activates two phonological representations (as in the wind was

blowing vs. wind up the clock) (Folk & Morris, 1995). A related consistency effect involves

words that have spelling patterns that have multiple pronunciations. The word have contains

the letter “a,” which in this case is pronounced as a “short” /a/ sound. But most of the time

-ave is pronounced with the “long” a sound, as in cave and save. So the words have, cave,

and save are said to be inconsistent because the same string of letters can have multiple

pronunciations. Words of this type take longer to read than words that have entirely

consistent letter–pronunciation patterns (Glushko, 1979), and the extra reading time

reflects the costs associated with selecting the correct phonological code from a number of

automatically activated candidates.

Some potential good reasons for a 'shallow' (i.e. good) orthografy here! A bad spelling system is literally causing us to take longer to read, not just to learn to read.

-

Phonemic awareness is an important precursor of literacy (the ability to read and write).

It is thought to play a causal role in reading success, because differences in phonemic

awareness can be measured in children who have not yet begun to read. Those prereaders’

phonemic awareness test scores then predict how successfully and how quickly they will

master reading skills two or three years down the line when they begin to read (Torgesen

et al., 1999, 2001; Wagner & Torgesen, 1987; Wagner, Torgesen, & Rashotte, 1994;

Wagner et al., 1997; see Wagner, Piasta, & Torgesen, 2006, for a review; but see Castles &

Coltheart, 2004, for a different perspective). Phonemic awareness can be assessed in a

variety of ways, including the elision, sound categorization, and blending tasks (Torgesen

et al., 1999), among others, but the best assessments of phonemic awareness involve multiple

measures. In the elision task, children are given a word such as cat and asked what it would

sound like if you got rid of the /k/sound. Sound categorization involves listening to sets of

words, such as pin, bun, fun, and gun, and identifying the word “that does not sound like the

others” (in this case, pin; Torgesen et al., 1999, p. 76). In blending tasks, children hear an

onset (word beginning) and a rime (vowel and consonant sound at the end of a syllable),

and say what they would sound like when they are put together. Children’s composite scores

on tests of phonemic awareness are strongly correlated with the development of reading

skill at later points in time. Children who are less phonemically aware will experience

greater difficulty learning to read, but effective interventions have been developed to

enhance children’s phonemic awareness, and hence to increase the likelihood that they will

acquire reading skill within the normal time frame (Ehri, Nunes, Willows, et al., 2001).

These shud work as early IQ tests.

And they do (even if this is a weak paper): http://www.psy.cuhk.edu.hk/psy_media/Cammie_files/016.correlates%20of%20phonological%20awareness%20implications%20for%20gifted%20education.pdf
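The three phonemic-awareness tasks from the Torgesen et al. passage can be stated as operations on sequences of phonemes rather than letters (note that the /k/ in cat is spelled “c”). This is a sketch under loud assumptions: the transcriptions are hypothetical, simplified ASCII symbols (“a” stands in for the vowel of cat), and the onset/rime split uses a toy five-vowel inventory.

```python
from collections import Counter

VOWELS = {"a", "e", "i", "o", "u"}  # toy vowel inventory for this sketch only


def elision(word, phoneme):
    """Elision task: say the word without one phoneme (first occurrence)."""
    phonemes = list(word)
    phonemes.remove(phoneme)
    return tuple(phonemes)


def onset_rime(word):
    """Split a syllable at its first vowel: onset = consonants before it, rime = the rest."""
    for i, ph in enumerate(word):
        if ph in VOWELS:
            return word[:i], word[i:]
    return word, ()


def blend(onset, rime):
    """Blending task: put an onset and a rime back together."""
    return tuple(onset) + tuple(rime)


def odd_one_out(transcriptions):
    """Sound categorization task: words whose rime differs from the majority rime."""
    rimes = Counter(onset_rime(ph)[1] for ph in transcriptions.values())
    majority, _ = rimes.most_common(1)[0]
    return [w for w, ph in transcriptions.items() if onset_rime(ph)[1] != majority]


cat = ("k", "a", "t")  # hypothetical transcription of "cat"
print(elision(cat, "k"))          # -> ('a', 't')
print(blend(("k",), ("a", "t")))  # -> ('k', 'a', 't')
print(odd_one_out({
    "pin": ("p", "i", "n"), "bun": ("b", "u", "n"),
    "fun": ("f", "u", "n"), "gun": ("g", "u", "n"),
}))                               # -> ['pin']
```

What the tests measure is whether a child can perform these operations mentally; the code only makes the expected answers explicit.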

-

There are different kinds of neighborhoods, and the kind of neighborhood a word

inhabits affects how easy it is to read that word. Different orthographic neighborhoods are

described as being consistent or inconsistent, based on how the different words in the

neighborhood are pronounced. If they are all pronounced alike, then the neighborhood is

consistent. If some words in the neighborhood are pronounced one way, and others are

pronounced another way, then the neighborhood is inconsistent. The neighborhood that

made inhabits is consistent, because all of the other members of the neighborhood (wade,

fade, etc.) are pronounced with the long /a/ sound. On the other hand, hint lives in an

inconsistent neighborhood because some of the neighbors are pronounced with the short

/i/ sound (mint, lint, tint), but some are pronounced with the long /i/ sound (pint). Words

from inconsistent neighborhoods take longer to pronounce than words from consistent

neighborhoods, and this effect extends to non-words as well (Glushko, 1979; see also Jared,

McRae, & Seidenberg, 1990; Seidenberg, Plaut, Petersen, McClelland, & McRae, 1994). So,

it takes you less time to say tade than it takes you to say bint. Why would this be?

Bad spelling even makes us speak slower...
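The consistent/inconsistent classification in the neighborhood passage can be sketched as follows. Assumptions, all hypothetical: a word's neighborhood is taken to be the known words sharing its orthographic body (the letters from the first vowel on, e.g. -ade, -int), which fits the passage's examples but is only one of several definitions in use; and “long a”/“short i” labels stand in for a real phonetic transcription. The sketch only classifies neighborhoods; it does not model reading times.

```python
# Hypothetical toy lexicon: spelling -> pronunciation of its vowel.
LEXICON = {
    "made": "long a", "wade": "long a", "fade": "long a",
    "hint": "short i", "mint": "short i", "lint": "short i", "tint": "short i",
    "pint": "long i",
}
VOWEL_LETTERS = set("aeiou")


def body(spelling):
    """Orthographic body: the letters from the first vowel letter onward."""
    for i, ch in enumerate(spelling):
        if ch in VOWEL_LETTERS:
            return spelling[i:]
    return spelling


def neighborhood(spelling, lexicon=LEXICON):
    """Known words sharing the body, excluding the item itself."""
    return {w: p for w, p in lexicon.items() if body(w) == body(spelling) and w != spelling}


def is_consistent(spelling, lexicon=LEXICON):
    """Consistent if every neighbor pronounces the shared body the same way."""
    return len(set(neighborhood(spelling, lexicon).values())) <= 1


for item in ["made", "hint", "tade", "bint"]:
    print(item, "consistent" if is_consistent(item) else "inconsistent")
# -> made consistent / hint inconsistent / tade consistent / bint inconsistent
```

Note that hint is itself pronounced regularly; a single neighbor (pint) is enough to make its neighborhood inconsistent, and the same check applies unchanged to non-words such as tade and bint.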

-

The single-route models would seem to enjoy a parsimony advantage, since they can

produce frequency and regularity effects, as well as their interaction, on the basis of a single

mechanism. However, recent studies have indicated that the exact position in a word that

leads to inconsistent spelling–sound mappings affects how quickly the word can be read

aloud. As noted above, it takes longer to read a word with an inconsistency at the beginning

(e.g., general, where hard /g/ as in goat is more common) than a word with an inconsistency

at the end (e.g., bomb, where the b is silent). This may be more consistent with the DRC

serial mapping of letters to sounds than the parallel activation posited by PDP-style single-route models (Coltheart & Rastle, 1994; Cortese, 1998; Rastle & Coltheart, 1999b; Roberts,

Rastle, Coltheart, & Besner, 2003).

In practical terms, this means that we shud begin with words that have problematic beginnings and endings. Words like “mnemonic” and “psychology”.

-

Treatment options for aphasia include pharmacological therapy (drugs) and various

forms of speech therapy. Let’s review pharmacological therapy before turning to speech

therapy. One of the main problems that happens following strokes is that damage to the

blood vessels in the brain reduces the blood flow to perisylvian brain regions, and

hypometabolism—less than normal activity—in those regions likely contributes to aphasic

symptoms. Therefore, some pharmacological treatments focus on increasing the blood

supply to the brain, and those treatments have been shown to be effective in some studies

(Kessler, Thiel, Karbe, & Heiss, 2001). The period immediately following the stroke appears

to be critical in terms of intervening to preserve function. For example, aphasia symptoms

can be alleviated by drugs that increase blood pressure if they are administered very rapidly

when the stroke occurs (Wise, Sutter, & Burkholder, 1972). During this period, aphasic

symptoms will reappear if blood pressure is allowed to fall, even if the patient’s blood

pressure is not abnormally low. In later stages of recovery, blood pressure can be reduced

without causing the aphasic symptoms to reappear. Other treatment options capitalize on

the fact that the brain has some ability to reorganize itself following an injury (this ability is

called neural plasticity). It turns out that stimulant drugs, including amphetamines, appear

to magnify or boost brain reorganization. When stimulants are taken in the period

immediately following a stroke, and patients are also given speech-language therapy, their

language function improves more than that of control patients who receive speech-language

therapy and a placebo in the six months after their strokes (Walker-Batson et al., 2001).

Very interesting application of amfetamins.