Betas and residualized variables / does non-g ability predict GPA?

https://twitter.com/KirkegaardEmil/status/641272758257717248

https://twitter.com/pnin1957/status/641284556818096128

Let's test that. We don't have the original data, but we can use open datasets instead. I like to use Wicherts' dataset. So let's analyze!

library(pacman)

p_load(kirkegaard, psych, stringr)

#load wicherts data

w = read.csv("wicherts_data.csv", sep = ";")

w = subset(w, select = c("ravenscore", "lre_cor", "nse_cor", "voc_cor", "van_cor", "hfi_cor", "ari_cor", "Zgpa"))

#remove missing

w = na.omit(w)

#standardize

w = std_df(w)

#subset the subtests

w_subtests = subset(w, select = c("ravenscore", "lre_cor", "nse_cor", "voc_cor", "van_cor", "hfi_cor", "ari_cor"))

#factor analyze

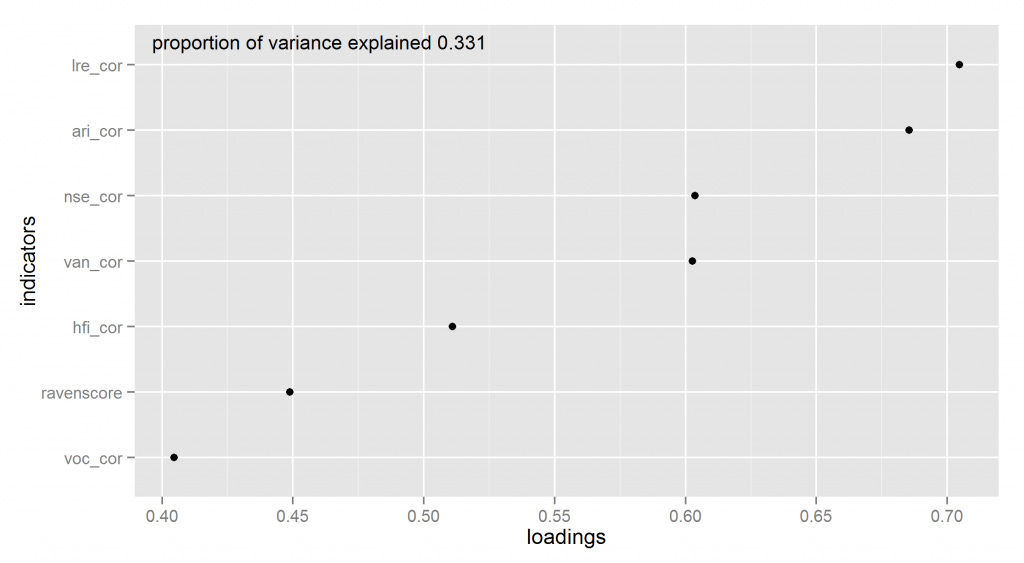

fa = fa(w_subtests)

#plot

plot_loadings(fa) + scale_x_continuous(breaks = seq(0, 1, .05))

#save scores

w_subtests$g = as.numeric(fa$scores)

#residualize

w_res = residualize_DF(w_subtests, "g")

#include GPA

w_res$GPA = w$Zgpa

#cors

write_clipboard(wtd.cors(w_res))

#predict GPA

fits = lm_beta_matrix(dependent = "GPA",

predictors = colnames(w_res)[-9],

data = w_res, return_models = "n")

write_clipboard(fits)

#why does the last model have NA for one variable?

model = str_c("GPA ~ ", str_c(colnames(w_res)[-9], collapse = " + "))

fit = lm(model, w_res)

summary(fit)Results

Correlations

| | ravenscore | lre_cor | nse_cor | voc_cor | van_cor | hfi_cor | ari_cor | g | GPA |
|---|---|---|---|---|---|---|---|---|---|
| ravenscore | 1 | -0.125 | -0.201 | -0.115 | 0.057 | 0.022 | -0.294 | 0 | -0.028 |
| lre_cor | -0.125 | 1 | -0.316 | -0.143 | -0.195 | -0.23 | -0.288 | 0 | -0.074 |
| nse_cor | -0.201 | -0.316 | 1 | -0.206 | -0.381 | -0.103 | 0.099 | 0 | -0.116 |
| voc_cor | -0.115 | -0.143 | -0.206 | 1 | 0.198 | -0.141 | -0.207 | 0 | 0.08 |
| van_cor | 0.057 | -0.195 | -0.381 | 0.198 | 1 | -0.081 | -0.406 | 0 | 0.137 |
| hfi_cor | 0.022 | -0.23 | -0.103 | -0.141 | -0.081 | 1 | -0.233 | 0 | 0.037 |
| ari_cor | -0.294 | -0.288 | 0.099 | -0.207 | -0.406 | -0.233 | 1 | 0 | 0.007 |
| g | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0.334 |
| GPA | -0.028 | -0.074 | -0.116 | 0.08 | 0.137 | 0.037 | 0.007 | 0.334 | 1 |

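Model fits

A blank cell means the predictor was not in that model.

| Model # | ravenscore | lre_cor | nse_cor | voc_cor | van_cor | hfi_cor | ari_cor | g | r2.adj. |
|---|---|---|---|---|---|---|---|---|---|
| 1 | -0.028 | | | | | | | | -0.003 |
| 2 | | -0.074 | | | | | | | 0.002 |
| 3 | | | -0.116 | | | | | | 0.01 |
| 4 | | | | 0.08 | | | | | 0.003 |
| 5 | | | | | 0.137 | | | | 0.015 |
| 6 | | | | | | 0.037 | | | -0.002 |
| 7 | | | | | | | 0.007 | | -0.003 |
| 8 | | | | | | | | 0.334 | 0.108 |
| 9 | -0.038 | -0.079 | | | | | | | 0 |
| 10 | -0.054 | | -0.127 | | | | | | 0.009 |
| 11 | -0.019 | | | 0.078 | | | | | 0 |
| 12 | -0.036 | | | | 0.139 | | | | 0.013 |
| 13 | -0.029 | | | | | 0.038 | | | -0.005 |
| 14 | -0.029 | | | | | | -0.001 | | -0.006 |
| 15 | -0.028 | | | | | | | 0.334 | 0.106 |
| 16 | | -0.123 | -0.155 | | | | | | 0.02 |
| 17 | | -0.064 | | 0.071 | | | | | 0.004 |
| 18 | | -0.049 | | | 0.127 | | | | 0.014 |
| 19 | | -0.069 | | | | 0.021 | | | -0.001 |
| 20 | | -0.078 | | | | | -0.015 | | -0.001 |
| 21 | | -0.074 | | | | | | 0.334 | 0.111 |
| 22 | | | -0.104 | 0.059 | | | | | 0.01 |
| 23 | | | -0.075 | | 0.108 | | | | 0.017 |
| 24 | | | -0.114 | | | 0.026 | | | 0.007 |
| 25 | | | -0.118 | | | | 0.019 | | 0.007 |
| 26 | | | -0.116 | | | | | 0.334 | 0.119 |
| 27 | | | | 0.055 | 0.126 | | | | 0.015 |
| 28 | | | | 0.087 | | 0.05 | | | 0.002 |
| 29 | | | | 0.086 | | | 0.025 | | 0 |
| 30 | | | | 0.08 | | | | 0.334 | 0.112 |
| 31 | | | | | 0.141 | 0.049 | | | 0.014 |
| 32 | | | | | 0.168 | | 0.075 | | 0.017 |
| 33 | | | | | 0.137 | | | 0.334 | 0.124 |
| 34 | | | | | | 0.041 | 0.017 | | -0.005 |
| 35 | | | | | | 0.037 | | 0.334 | 0.107 |
| 36 | | | | | | | 0.007 | 0.334 | 0.105 |
| 37 | -0.082 | -0.14 | -0.177 | | | | | | 0.023 |
| 38 | -0.029 | -0.068 | | 0.067 | | | | | 0.001 |
| 39 | -0.043 | -0.054 | | | 0.129 | | | | 0.013 |
| 40 | -0.038 | -0.074 | | | | 0.021 | | | -0.003 |
| 41 | -0.049 | -0.09 | | | | | -0.033 | | -0.003 |
| 42 | -0.038 | -0.079 | | | | | | 0.334 | 0.109 |
| 43 | -0.046 | | -0.115 | 0.052 | | | | | 0.008 |
| 44 | -0.052 | | -0.086 | | 0.107 | | | | 0.016 |
| 45 | -0.054 | | -0.125 | | | 0.026 | | | 0.007 |
| 46 | -0.053 | | -0.127 | | | | 0.004 | | 0.006 |
| 47 | -0.054 | | -0.127 | | | | | 0.334 | 0.119 |
| 48 | -0.03 | | | 0.052 | 0.128 | | | | 0.012 |
| 49 | -0.02 | | | 0.085 | | 0.05 | | | -0.001 |
| 50 | -0.013 | | | 0.083 | | | 0.021 | | -0.003 |
| 51 | -0.019 | | | 0.078 | | | | 0.334 | 0.109 |
| 52 | -0.038 | | | | 0.143 | 0.05 | | | 0.012 |
| 53 | -0.017 | | | | 0.166 | | 0.07 | | 0.014 |
| 54 | -0.036 | | | | 0.139 | | | 0.334 | 0.122 |
| 55 | -0.027 | | | | | 0.04 | 0.009 | | -0.008 |
| 56 | -0.029 | | | | | 0.038 | | 0.334 | 0.104 |
| 57 | -0.029 | | | | | | -0.001 | 0.334 | 0.103 |
| 58 | | -0.115 | -0.146 | 0.034 | | | | | 0.018 |
| 59 | | -0.097 | -0.119 | | 0.072 | | | | 0.021 |
| 60 | | -0.125 | -0.157 | | | -0.008 | | | 0.017 |
| 61 | | -0.127 | -0.155 | | | | -0.014 | | 0.017 |
| 62 | | -0.123 | -0.155 | | | | | 0.334 | 0.129 |
| 63 | | -0.043 | | 0.051 | 0.118 | | | | 0.013 |
| 64 | | -0.055 | | 0.078 | | 0.036 | | | 0.001 |
| 65 | | -0.062 | | 0.072 | | | 0.004 | | 0 |
| 66 | | -0.064 | | 0.071 | | | | 0.334 | 0.113 |
| 67 | | -0.039 | | | 0.133 | 0.039 | | | 0.012 |
| 68 | | -0.024 | | | 0.158 | | 0.065 | | 0.014 |
| 69 | | -0.049 | | | 0.127 | | | 0.334 | 0.123 |
| 70 | | -0.072 | | | | 0.018 | -0.009 | | -0.005 |
| 71 | | -0.069 | | | | 0.021 | | 0.334 | 0.108 |
| 72 | | -0.078 | | | | | -0.015 | 0.334 | 0.108 |
| 73 | | | -0.068 | 0.046 | 0.102 | | | | 0.015 |
| 74 | | | -0.099 | 0.065 | | 0.036 | | | 0.008 |
| 75 | | | -0.106 | 0.065 | | | 0.031 | | 0.007 |
| 76 | | | -0.104 | 0.059 | | | | 0.334 | 0.119 |
| 77 | | | -0.069 | | 0.114 | 0.039 | | | 0.015 |
| 78 | | | -0.07 | | 0.139 | | 0.071 | | 0.017 |
| 79 | | | -0.075 | | 0.108 | | | 0.334 | 0.126 |
| 80 | | | -0.116 | | | 0.031 | 0.026 | | 0.004 |
| 81 | | | -0.114 | | | 0.026 | | 0.334 | 0.116 |
| 82 | | | -0.118 | | | | 0.019 | 0.334 | 0.116 |
| 83 | | | | 0.063 | 0.129 | 0.057 | | | 0.015 |
| 84 | | | | 0.067 | 0.158 | | 0.085 | | 0.017 |
| 85 | | | | 0.055 | 0.126 | | | 0.334 | 0.124 |
| 86 | | | | 0.098 | | 0.061 | 0.042 | | 0 |
| 87 | | | | 0.087 | | 0.05 | | 0.334 | 0.111 |
| 88 | | | | 0.086 | | | 0.025 | 0.334 | 0.109 |
| 89 | | | | | 0.183 | 0.075 | 0.099 | | 0.018 |
| 90 | | | | | 0.141 | 0.049 | | 0.334 | 0.123 |
| 91 | | | | | 0.168 | | 0.075 | 0.334 | 0.126 |
| 92 | | | | | | 0.041 | 0.017 | 0.334 | 0.104 |
| 93 | -0.078 | -0.135 | -0.171 | 0.017 | | | | | 0.02 |
| 94 | -0.076 | -0.116 | -0.144 | | 0.064 | | | | 0.023 |
| 95 | -0.082 | -0.144 | -0.179 | | | -0.012 | | | 0.02 |
| 96 | -0.099 | -0.158 | -0.181 | | | | -0.05 | | 0.022 |
| 97 | -0.082 | -0.14 | -0.177 | | | | | 0.334 | 0.133 |
| 98 | -0.036 | -0.048 | | 0.045 | 0.121 | | | | 0.011 |
| 99 | -0.028 | -0.059 | | 0.074 | | 0.035 | | | -0.002 |
| 100 | -0.033 | -0.072 | | 0.064 | | | -0.01 | | -0.003 |
| 101 | -0.029 | -0.068 | | 0.067 | | | | 0.334 | 0.11 |
| 102 | -0.042 | -0.044 | | | 0.134 | 0.039 | | | 0.011 |
| 103 | -0.026 | -0.032 | | | 0.154 | | 0.053 | | 0.011 |
| 104 | -0.043 | -0.054 | | | 0.129 | | | 0.334 | 0.122 |
| 105 | -0.048 | -0.085 | | | | 0.012 | -0.029 | | -0.006 |
| 106 | -0.038 | -0.074 | | | | 0.021 | | 0.334 | 0.106 |
| 107 | -0.049 | -0.09 | | | | | -0.033 | 0.334 | 0.107 |
| 108 | -0.046 | | -0.079 | 0.039 | 0.102 | | | | 0.014 |
| 109 | -0.045 | | -0.11 | 0.058 | | 0.035 | | | 0.006 |
| 110 | -0.04 | | -0.115 | 0.056 | | | 0.018 | | 0.005 |
| 111 | -0.046 | | -0.115 | 0.052 | | | | 0.334 | 0.118 |
| 112 | -0.052 | | -0.08 | | 0.113 | 0.039 | | | 0.014 |
| 113 | -0.034 | | -0.078 | | 0.133 | | 0.059 | | 0.015 |
| 114 | -0.052 | | -0.086 | | 0.107 | | | 0.334 | 0.125 |
| 115 | -0.051 | | -0.125 | | | 0.028 | 0.011 | | 0.003 |
| 116 | -0.054 | | -0.125 | | | 0.026 | | 0.334 | 0.116 |
| 117 | -0.053 | | -0.127 | | | | 0.004 | 0.334 | 0.115 |
| 118 | -0.03 | | | 0.059 | 0.132 | 0.057 | | | 0.012 |
| 119 | -0.005 | | | 0.066 | 0.158 | | 0.084 | | 0.014 |
| 120 | -0.03 | | | 0.052 | 0.128 | | | 0.334 | 0.122 |
| 121 | -0.007 | | | 0.096 | | 0.06 | 0.039 | | -0.003 |
| 122 | -0.02 | | | 0.085 | | 0.05 | | 0.334 | 0.108 |
| 123 | -0.013 | | | 0.083 | | | 0.021 | 0.334 | 0.106 |
| 124 | -0.013 | | | | 0.182 | 0.074 | 0.095 | | 0.015 |
| 125 | -0.038 | | | | 0.143 | 0.05 | | 0.334 | 0.122 |
| 126 | -0.017 | | | | 0.166 | | 0.07 | 0.334 | 0.123 |
| 127 | -0.027 | | | | | 0.04 | 0.009 | 0.334 | 0.101 |
| 128 | | -0.091 | -0.112 | 0.03 | 0.071 | | | | 0.018 |
| 129 | | -0.115 | -0.145 | 0.034 | | 0.001 | | | 0.014 |
| 130 | | -0.117 | -0.146 | 0.033 | | | -0.005 | | 0.014 |
| 131 | | -0.115 | -0.146 | 0.034 | | | | 0.334 | 0.127 |
| 132 | | -0.094 | -0.116 | | 0.075 | 0.01 | | | 0.017 |
| 133 | | -0.081 | -0.11 | | 0.092 | | 0.032 | | 0.018 |
| 134 | | -0.097 | -0.119 | | 0.072 | | | 0.334 | 0.13 |
| 135 | | -0.132 | -0.158 | | | -0.014 | -0.019 | | 0.014 |
| 136 | | -0.125 | -0.157 | | | -0.008 | | 0.334 | 0.126 |
| 137 | | -0.127 | -0.155 | | | | -0.014 | 0.334 | 0.127 |
| 138 | | -0.03 | | 0.059 | 0.123 | 0.049 | | | 0.012 |
| 139 | | -0.011 | | 0.065 | 0.155 | | 0.08 | | 0.014 |
| 140 | | -0.043 | | 0.051 | 0.118 | | | 0.334 | 0.123 |
| 141 | | -0.045 | | 0.085 | | 0.044 | 0.022 | | -0.002 |
| 142 | | -0.055 | | 0.078 | | 0.036 | | 0.334 | 0.111 |
| 143 | | -0.062 | | 0.072 | | | 0.004 | 0.334 | 0.11 |
| 144 | | 0.012 | | | 0.189 | 0.08 | 0.106 | | 0.015 |
| 145 | | -0.039 | | | 0.133 | 0.039 | | 0.334 | 0.122 |
| 146 | | -0.024 | | | 0.158 | | 0.065 | 0.334 | 0.123 |
| 147 | | -0.072 | | | | 0.018 | -0.009 | 0.334 | 0.105 |
| 148 | | | -0.059 | 0.054 | 0.108 | 0.047 | | | 0.014 |
| 149 | | | -0.061 | 0.058 | 0.135 | | 0.08 | | 0.017 |
| 150 | | | -0.068 | 0.046 | 0.102 | | | 0.334 | 0.125 |
| 151 | | | -0.1 | 0.076 | | 0.048 | 0.044 | | 0.006 |
| 152 | | | -0.099 | 0.065 | | 0.036 | | 0.334 | 0.117 |
| 153 | | | -0.106 | 0.065 | | | 0.031 | 0.334 | 0.117 |
| 154 | | | -0.059 | | 0.157 | 0.065 | 0.092 | | 0.018 |
| 155 | | | -0.069 | | 0.114 | 0.039 | | 0.334 | 0.124 |
| 156 | | | -0.07 | | 0.139 | | 0.071 | 0.334 | 0.127 |
| 157 | | | -0.116 | | | 0.031 | 0.026 | 0.334 | 0.114 |
| 158 | | | | 0.083 | 0.175 | 0.09 | 0.117 | | 0.021 |
| 159 | | | | 0.063 | 0.129 | 0.057 | | 0.334 | 0.124 |
| 160 | | | | 0.067 | 0.158 | | 0.085 | 0.334 | 0.127 |
| 161 | | | | 0.098 | | 0.061 | 0.042 | 0.334 | 0.109 |
| 162 | | | | | 0.183 | 0.075 | 0.099 | 0.334 | 0.128 |
| 163 | -0.072 | -0.112 | -0.139 | 0.015 | 0.063 | | | | 0.019 |
| 164 | -0.079 | -0.138 | -0.174 | 0.015 | | -0.009 | | | 0.016 |
| 165 | -0.1 | -0.158 | -0.182 | -0.002 | | | -0.05 | | 0.018 |
| 166 | -0.078 | -0.135 | -0.171 | 0.017 | | | | 0.334 | 0.13 |
| 167 | -0.075 | -0.115 | -0.143 | | 0.065 | 0.003 | | | 0.019 |
| 168 | -0.083 | -0.127 | -0.152 | | 0.052 | | -0.018 | | 0.019 |
| 169 | -0.076 | -0.116 | -0.144 | | 0.064 | | | 0.334 | 0.133 |
| 170 | -0.106 | -0.173 | -0.19 | | | -0.034 | -0.063 | | 0.019 |
| 171 | -0.082 | -0.144 | -0.179 | | | -0.012 | | 0.334 | 0.13 |
| 172 | -0.099 | -0.158 | -0.181 | | | | -0.05 | 0.334 | 0.132 |
| 173 | -0.035 | -0.035 | | 0.053 | 0.125 | 0.048 | | | 0.01 |
| 174 | -0.01 | -0.015 | | 0.063 | 0.153 | | 0.075 | | 0.011 |
| 175 | -0.036 | -0.048 | | 0.045 | 0.121 | | | 0.334 | 0.121 |
| 176 | -0.024 | -0.054 | | 0.077 | | 0.038 | 0.009 | | -0.005 |
| 177 | -0.028 | -0.059 | | 0.074 | | 0.035 | | 0.334 | 0.108 |
| 178 | -0.033 | -0.072 | | 0.064 | | | -0.01 | 0.334 | 0.107 |
| 179 | -0.01 | 0.008 | | | 0.186 | 0.078 | 0.1 | | 0.012 |
| 180 | -0.042 | -0.044 | | | 0.134 | 0.039 | | 0.334 | 0.12 |
| 181 | -0.026 | -0.032 | | | 0.154 | | 0.053 | 0.334 | 0.121 |
| 182 | -0.048 | -0.085 | | | | 0.012 | -0.029 | 0.334 | 0.104 |
| 183 | -0.044 | | -0.07 | 0.046 | 0.107 | 0.046 | | | 0.012 |
| 184 | -0.022 | | -0.067 | 0.053 | 0.131 | | 0.072 | | 0.014 |
| 185 | -0.046 | | -0.079 | 0.039 | 0.102 | | | 0.334 | 0.124 |
| 186 | -0.034 | | -0.108 | 0.067 | | 0.044 | 0.032 | | 0.003 |
| 187 | -0.045 | | -0.11 | 0.058 | | 0.035 | | 0.334 | 0.116 |
| 188 | -0.04 | | -0.115 | 0.056 | | | 0.018 | 0.334 | 0.115 |
| 189 | -0.028 | | -0.066 | | 0.152 | 0.062 | 0.082 | | 0.015 |
| 190 | -0.052 | | -0.08 | | 0.113 | 0.039 | | 0.334 | 0.124 |
| 191 | -0.034 | | -0.078 | | 0.133 | | 0.059 | 0.334 | 0.125 |
| 192 | -0.051 | | -0.125 | | | 0.028 | 0.011 | 0.334 | 0.113 |
| 193 | 0.004 | | | 0.083 | 0.176 | 0.091 | 0.118 | | 0.018 |
| 194 | -0.03 | | | 0.059 | 0.132 | 0.057 | | 0.334 | 0.122 |
| 195 | -0.005 | | | 0.066 | 0.158 | | 0.084 | 0.334 | 0.124 |
| 196 | -0.007 | | | 0.096 | | 0.06 | 0.039 | 0.334 | 0.106 |
| 197 | -0.013 | | | | 0.182 | 0.074 | 0.095 | 0.334 | 0.125 |
| 198 | | -0.083 | -0.105 | 0.035 | 0.076 | 0.018 | | | 0.015 |
| 199 | | -0.065 | -0.095 | 0.042 | 0.098 | | 0.046 | | 0.016 |
| 200 | | -0.091 | -0.112 | 0.03 | 0.071 | | | 0.334 | 0.128 |
| 201 | | -0.118 | -0.146 | 0.032 | | -0.002 | -0.006 | | 0.011 |
| 202 | | -0.115 | -0.145 | 0.034 | | 0.001 | | 0.334 | 0.124 |
| 203 | | -0.117 | -0.146 | 0.033 | | | -0.005 | 0.334 | 0.124 |
| 204 | | -0.053 | -0.089 | | 0.12 | 0.039 | 0.059 | | 0.015 |
| 205 | | -0.094 | -0.116 | | 0.075 | 0.01 | | 0.334 | 0.127 |
| 206 | | -0.081 | -0.11 | | 0.092 | | 0.032 | 0.334 | 0.128 |
| 207 | | -0.132 | -0.158 | | | -0.014 | -0.019 | 0.334 | 0.124 |
| 208 | | 0.044 | | 0.094 | 0.195 | 0.11 | 0.144 | | 0.019 |
| 209 | | -0.03 | | 0.059 | 0.123 | 0.049 | | 0.334 | 0.122 |
| 210 | | -0.011 | | 0.065 | 0.155 | | 0.08 | 0.334 | 0.124 |
| 211 | | -0.045 | | 0.085 | | 0.044 | 0.022 | 0.334 | 0.108 |
| 212 | | 0.012 | | | 0.189 | 0.08 | 0.106 | 0.334 | 0.125 |
| 213 | | | -0.044 | 0.075 | 0.157 | 0.082 | 0.11 | | 0.019 |
| 214 | | | -0.059 | 0.054 | 0.108 | 0.047 | | 0.334 | 0.124 |
| 215 | | | -0.061 | 0.058 | 0.135 | | 0.08 | 0.334 | 0.127 |
| 216 | | | -0.1 | 0.076 | | 0.048 | 0.044 | 0.334 | 0.116 |
| 217 | | | -0.059 | | 0.157 | 0.065 | 0.092 | 0.334 | 0.128 |
| 218 | | | | 0.083 | 0.175 | 0.09 | 0.117 | 0.334 | 0.131 |
| 219 | -0.071 | -0.109 | -0.136 | 0.017 | 0.065 | 0.007 | | | 0.016 |
| 220 | -0.078 | -0.12 | -0.145 | 0.011 | 0.056 | | -0.011 | | 0.016 |
| 221 | -0.072 | -0.112 | -0.139 | 0.015 | 0.063 | | | 0.334 | 0.13 |
| 222 | -0.117 | -0.188 | -0.201 | -0.024 | | -0.045 | -0.077 | | 0.016 |
| 223 | -0.079 | -0.138 | -0.174 | 0.015 | | -0.009 | | 0.334 | 0.127 |
| 224 | -0.1 | -0.158 | -0.182 | -0.002 | | | -0.05 | 0.334 | 0.128 |
| 225 | -0.09 | -0.142 | -0.163 | | 0.038 | -0.014 | -0.032 | | 0.016 |
| 226 | -0.075 | -0.115 | -0.143 | | 0.065 | 0.003 | | 0.334 | 0.13 |
| 227 | -0.083 | -0.127 | -0.152 | | 0.052 | | -0.018 | 0.334 | 0.13 |
| 228 | -0.106 | -0.173 | -0.19 | | | -0.034 | -0.063 | 0.334 | 0.129 |
| 229 | 0.023 | 0.056 | | 0.101 | 0.201 | 0.118 | 0.16 | | 0.016 |
| 230 | -0.035 | -0.035 | | 0.053 | 0.125 | 0.048 | | 0.334 | 0.12 |
| 231 | -0.01 | -0.015 | | 0.063 | 0.153 | | 0.075 | 0.334 | 0.121 |
| 232 | -0.024 | -0.054 | | 0.077 | | 0.038 | 0.009 | 0.334 | 0.105 |
| 233 | -0.01 | 0.008 | | | 0.186 | 0.078 | 0.1 | 0.334 | 0.122 |
| 234 | -0.009 | | -0.046 | 0.072 | 0.155 | 0.08 | 0.106 | | 0.016 |
| 235 | -0.044 | | -0.07 | 0.046 | 0.107 | 0.046 | | 0.334 | 0.123 |
| 236 | -0.022 | | -0.067 | 0.053 | 0.131 | | 0.072 | 0.334 | 0.124 |
| 237 | -0.034 | | -0.108 | 0.067 | | 0.044 | 0.032 | 0.334 | 0.114 |
| 238 | -0.028 | | -0.066 | | 0.152 | 0.062 | 0.082 | 0.334 | 0.125 |
| 239 | 0.004 | | | 0.083 | 0.176 | 0.091 | 0.118 | 0.334 | 0.128 |
| 240 | | 0.015 | -0.034 | 0.08 | 0.168 | 0.09 | 0.121 | | 0.016 |
| 241 | | -0.083 | -0.105 | 0.035 | 0.076 | 0.018 | | 0.334 | 0.125 |
| 242 | | -0.065 | -0.095 | 0.042 | 0.098 | | 0.046 | 0.334 | 0.126 |
| 243 | | -0.118 | -0.146 | 0.032 | | -0.002 | -0.006 | 0.334 | 0.121 |
| 244 | | -0.053 | -0.089 | | 0.12 | 0.039 | 0.059 | 0.334 | 0.126 |
| 245 | | 0.044 | | 0.094 | 0.195 | 0.11 | 0.144 | 0.334 | 0.129 |
| 246 | | | -0.044 | 0.075 | 0.157 | 0.082 | 0.11 | 0.334 | 0.13 |
| 247 | -0.071 | -0.109 | -0.136 | 0.017 | 0.065 | 0.007 | NA | | 0.016 |
| 248 | -0.071 | -0.109 | -0.136 | 0.017 | 0.065 | 0.007 | | 0.334 | 0.127 |
| 249 | -0.078 | -0.12 | -0.145 | 0.011 | 0.056 | | -0.011 | 0.334 | 0.127 |
| 250 | -0.117 | -0.188 | -0.201 | -0.024 | | -0.045 | -0.077 | 0.334 | 0.127 |
| 251 | -0.09 | -0.142 | -0.163 | | 0.038 | -0.014 | -0.032 | 0.334 | 0.127 |
| 252 | 0.023 | 0.056 | | 0.101 | 0.201 | 0.118 | 0.16 | 0.334 | 0.127 |
| 253 | -0.009 | | -0.046 | 0.072 | 0.155 | 0.08 | 0.106 | 0.334 | 0.127 |
| 254 | | 0.015 | -0.034 | 0.08 | 0.168 | 0.09 | 0.121 | 0.334 | 0.127 |
| 255 | -0.071 | -0.109 | -0.136 | 0.017 | 0.065 | 0.007 | NA | 0.334 | 0.127 |

[Bonus points to whoever can explain why ari_cor has a missing value in the last model. I checked: it is not a problem with my function. I don't know why.]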
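One possible explanation for the missing value, offered as a sketch and not checked against the Wicherts data: regression-type factor scores are a weighted sum of the standardized subtests, so once every subtest is residualized on g, the same weights applied to the residuals sum to exactly zero. The seven residualized subtests are then linearly dependent, and `lm()` reports `NA` for the last one it cannot estimate. The simulation below uses made-up data, and its `g` is a hypothetical stand-in (a plain weighted sum with arbitrary weights), not the output of `fa()`.

```r
set.seed(1)
n = 200

# simulated data: one common factor plus noise for 7 made-up subtests
common = rnorm(n)
X = sapply(1:7, function(i) 0.6 * common + rnorm(n))
X = scale(X)
colnames(X) = paste0("test", 1:7)

# hypothetical stand-in for regression factor scores:
# a weighted sum of the standardized subtests (weights are arbitrary)
weights = seq(0.1, 0.7, by = 0.1)
g = as.numeric(X %*% weights)

# residualize every subtest on g
res = apply(X, 2, function(x) resid(lm(x ~ g)))

# the weighted sum of the residuals vanishes, so they are linearly dependent
max_abs_sum = max(abs(res %*% weights))
rank_res = qr(res)$rank

# lm() therefore cannot estimate all seven residualized subtests at once
y = 0.3 * g + rnorm(n)
d = data.frame(res, g = g, y = y)
fit = lm(y ~ ., data = d)
na_terms = names(coef(fit))[is.na(coef(fit))]

print(rank_res)   # number of linearly independent residualized subtests
print(na_terms)   # the coefficient lm() could not estimate
```

If this is what happens in the real data, it would also explain why every model containing six of the seven residualized subtests reaches the same adjusted R2 in the table: any six of them span the same space.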

So Timofey Pnin is right. The beta for g stays exactly the same across models (.334), at least to 3 digits.
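The stability of the g beta follows from how the predictors were built: each residualized subtest is uncorrelated with g by construction (the zeros in the g row of the correlation table), and a predictor that is uncorrelated with all the others keeps the same regression coefficient no matter which of them are included. A minimal sketch with simulated data, where the variable names and effect sizes are made up for illustration:

```r
set.seed(2)
n = 300
g = as.numeric(scale(rnorm(n)))

# two made-up predictors, residualized on g so they are uncorrelated with it
x1 = resid(lm(rnorm(n) ~ g))
x2 = resid(lm(rnorm(n) ~ g))

# outcome depends on g and, weakly, on x1
y = 0.3 * g + 0.1 * x1 + rnorm(n)

# coefficient of g alone and alongside the residualized predictors
b_alone = coef(lm(y ~ g))["g"]
b_with_x1 = coef(lm(y ~ g + x1))["g"]
b_with_both = coef(lm(y ~ g + x1 + x2))["g"]

print(c(b_alone, b_with_x1, b_with_both))
```

The three printed coefficients agree up to floating-point error, which mirrors the constant .334 in the model fits.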

We may also note that adding the other predictors did not have much effect: g alone (model #8) has R2 adj. = .108, while the best model according to R2 adj. (#97) has .133. Notice how this model has negative betas for the non-g predictors. In other words, one is better off with lower scores. Surely that can't be right. It is probably just a fluke due to overfitting...
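To see how easily the best of 255 models can edge out the g-only model by chance, here is a small simulation sketch. Everything in it is simulated: GPA depends only on g, the seven other predictors are pure noise, and the names `noise1` to `noise7` are placeholders.

```r
set.seed(3)
n = 300
g = rnorm(n)
noise = matrix(rnorm(n * 7), ncol = 7,
               dimnames = list(NULL, paste0("noise", 1:7)))
d = data.frame(noise, g = g)
d$GPA = 0.33 * d$g + rnorm(n)   # only g has a real effect

predictors = setdiff(colnames(d), "GPA")

# fit every non-empty subset of the 8 predictors (2^8 - 1 = 255 models)
adj_r2 = unlist(lapply(seq_along(predictors), function(k) {
  combn(predictors, k, FUN = function(vars) {
    summary(lm(reformulate(vars, response = "GPA"), data = d))$adj.r.squared
  })
}))

g_only = summary(lm(GPA ~ g, data = d))$adj.r.squared

print(length(adj_r2))
print(c(g_only = g_only, best = max(adj_r2)))
```

The best subset can never do worse than g alone on adjusted R2, because the g-only model is itself one of the 255 candidates. In this simulation any gain it shows comes from noise predictors.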

Testing overfitting

We can test overfitting using lasso regression (read this book, seriously, it's a great book!). Because the cross-validation that tunes the lasso splits the data at random, the results vary from run to run, so we repeat it a large number of times and examine the overall results.

```r
# lasso regression
fits_2 = MOD_repeat_cv_glmnet(df = w_res,
                              dependent = "GPA",
                              predictors = colnames(w_res)[-9],
                              runs = 500)

write_clipboard(MOD_summarize_models(fits_2))
```

Lasso results

| | ravenscore | lre_cor | nse_cor | voc_cor | van_cor | hfi_cor | ari_cor | g |
|---|---|---|---|---|---|---|---|---|
| mean | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.096 |
| median | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.104 |
| sd | 0 | 0 | 0 | 0 | 0.001 | 0 | 0 | 0.033 |
| fraction_zeroNA | 1 | 1 | 1 | 1 | 0.996 | 1 | 1 | 0.01 |

The lasso confirms our suspicions. The non-g variables were fairly useless; their apparent usefulness was due to overfitting. g retained its usefulness in 99% of the runs. The most promising of the other candidates (van_cor) was useful in only 0.4% of runs.
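The repeated lasso can also be sketched with glmnet directly. This runs on simulated data and is not the code inside `MOD_repeat_cv_glmnet`; the choice of `lambda.1se`, the 50 runs, and the summary rows are assumptions made to resemble the table above.

```r
library(glmnet)

set.seed(4)
n = 300
# simulated predictors: seven noise variables plus g (names are placeholders)
x = matrix(rnorm(n * 8), ncol = 8,
           dimnames = list(NULL, c(paste0("noise", 1:7), "g")))
y = 0.33 * x[, "g"] + rnorm(n)

runs = 50
# each run draws new random folds, so the selected penalty (and betas) can differ
coefs = t(sapply(seq_len(runs), function(i) {
  cvfit = cv.glmnet(x, y)                            # alpha = 1 (lasso) by default
  as.matrix(coef(cvfit, s = "lambda.1se"))[-1, 1]    # drop the intercept
}))
colnames(coefs) = colnames(x)

# summarize the betas across runs
summary_table = rbind(mean = colMeans(coefs),
                      median = apply(coefs, 2, median),
                      sd = apply(coefs, 2, sd),
                      fraction_zero = colMeans(coefs == 0))
print(round(summary_table, 3))
```

The `fraction_zero` row is the share of runs in which the lasso shrank a predictor's beta all the way to zero, which is how the 99% and 0.4% figures above are read off the table.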

This is probably worth writing into a short paper. Contact me if you are willing to do this. I will help you, but I don't have time to write it all myself.