Does intelligence have nonlinear effects on political opinions?

Yes, and the shape of the function differs wildly across beliefs

Back in March I published this study:

Kirkegaard, E. O. W. (2025). Does intelligence have nonlinear effects on political opinions? OpenPsych, 1(1). https://doi.org/10.26775/OP.2025.03.24

We sought to study intelligence’s relationship with political opinions with particular interest in nonlinear associations. We surveyed 1,003 American adults using Prolific, where we measured English vocabulary ability, as well as 26 political opinions. We find that intelligence has detectable linear associations with most political opinions (21 of 26). Using natural splines and the multivariate adaptive regression splines (MARS) algorithm, we find evidence of nonlinear effects on both individual opinions (15 of 26) and a general conservatism score. Specifically, the association between intelligence and conservatism is mainly negative, but becomes positive in the right tail.

The background of the study is the ever-present discussion about which beliefs relate to good and bad personality traits. Traditionally, academic political psychology has been concerned with proving that American conservatives are worse people in a variety of ways: higher in dogmatism, lower in open-mindedness, higher in authoritarianism, less trusting of science, and, of course, more x-ist and x-phobic. I reviewed a book by Michael Ryan, who takes all of these for granted and proposes a 'conservatives are archaic sub-humans' model (before advocating an authoritarian world socialist government). The latest addition to this game comes not so much from academics as from online commentators. The goal is to prove that conservative beliefs correlate negatively with intelligence, and that supporters of the Republican party are stupid. Richard Hanania has been going on about this for years.

There are two main problems with the data used to reach this conclusion. First, most results come from analyses of the GSS and ANES datasets, or from more anthropological observations (reading habits [debunked for the UK], scams [Trumpcoin etc.], bad websites with bad ads). These two datasets only have a 10-item vocabulary test, so the measure of intelligence is unsatisfactory in terms of range and reliability. Second, both of those datasets are American, so at most one could show that American conservatives are less intelligent; maybe British conservatives are bright and the German ones mediocre.

Furthermore, the gaps between party supporters change over the years depending on which party is the elite one at the time and which one is the populist one. Right now the Republicans are the populists and the Democrats the elites, so the relationship between intelligence and party allegiance has unsurprisingly changed in tandem. Finally, if we ignore the time aspect of the associations and just look at ideological self-classification from the 1970s to the present, we get this:

The difference is about 1 IQ point, which is hardly worth caring about; it is smaller than the sex difference in intelligence.

However, might intelligence have nonlinear relationships to political beliefs? The mid-to-high intelligence zones of the internet are very leftist (e.g. Reddit, political debates on social media), but for the right tail it is less clear. The 10-item vocabulary test in the GSS does not measure above ~120 IQ (the ceiling of 10/10 correct), so it cannot answer the question of what nonlinearities may exist in the right tail. The study was designed to shed some light on this question.
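A small simulation makes the ceiling problem concrete. This is a sketch under invented assumptions: the 10 items follow a two-parameter logistic (2PL) item response model with made-up discriminations and difficulties, not the real GSS Wordsum parameters. Everyone who answers all 10 items correctly receives the same score, yet their simulated IQs spread over a wide range.

```python
import numpy as np

rng = np.random.default_rng(2025)

# Hypothetical population: IQ ~ N(100, 15), converted to a z-score (theta) for the item model.
n = 100_000
iq = rng.normal(100, 15, n)
theta = (iq - 100) / 15

# Hypothetical 10-item test under a 2PL model. Discriminations (a) and
# difficulties (b) are invented for illustration, not real Wordsum values.
a = np.full(10, 1.5)
b = np.linspace(-2.0, 1.0, 10)

# Probability of a correct answer for each person x item, then simulated responses.
p_correct = 1 / (1 + np.exp(-a * (theta[:, None] - b)))
score = (rng.random((n, 10)) < p_correct).sum(axis=1)

# All perfect scorers look identical to the test, whatever their IQ.
perfect = iq[score == 10]
print(f"share with 10/10: {perfect.size / n:.1%}")
print(f"IQ of perfect scorers: 10th pct {np.percentile(perfect, 10):.0f}, "
      f"median {np.median(perfect):.0f}, 90th pct {np.percentile(perfect, 90):.0f}")
```

With these hypothetical parameters, the 10th-to-90th percentile range of IQ among the perfect scorers spans more than 15 IQ points, all of which the test collapses into a single score.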

The data are two waves of Americans from Prolific, collected using variations of their representative sampling methods, for a total of n = 958 people with data. The intelligence measure was again a vocabulary test, because we were collecting validation and norm data for a new vocabulary scale and decided to attach some political questions at the end to make this study possible. The full test has 232 items, so its reliability was extremely high (rxx > 0.95), even in the right tail. Note that the second half of the sample was only given 50 vocabulary questions, but that abbreviated test was also very reliable:
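Why test length matters so much can be sketched with the Spearman-Brown prophecy formula, which predicts reliability when a test is lengthened with parallel items. The starting reliability of 0.70 for a 10-item test is a hypothetical value chosen for illustration, and real items are rarely perfectly parallel, so treat the outputs as rough projections, not as the study's reliabilities.

```python
def spearman_brown(r_xx: float, length_factor: float) -> float:
    """Predicted reliability when a test is lengthened by `length_factor`,
    assuming the added items are parallel to the existing ones."""
    return length_factor * r_xx / (1 + (length_factor - 1) * r_xx)

# Hypothetical starting point: a 10-item test with reliability 0.70.
r_10 = 0.70
for n_items in (10, 50, 232):
    k = n_items / 10  # how many times longer than the 10-item test
    print(f"{n_items:>3} items: predicted r_xx = {spearman_brown(r_10, k):.2f}")
```

Under these assumptions, 50 items would reach about 0.92 and 232 items about 0.98, which is at least consistent with the high reliabilities reported above.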

The linear associations between vocabulary score and political opinions were these:

After adjustments for age, sex, and ethnicity, there are some fairly typical positive associations between vocabulary score and more left-wing beliefs. For instance, beta = 0.24 for pro-more-immigration and beta = -0.22 for less regulation. Only a few beliefs break this pattern, such as pro-nuclear power, with a positive beta of 0.16.
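For readers who want to see what an "adjusted beta" means mechanically, here is a minimal sketch on simulated data (not the study's data): the opinion and the vocabulary score are standardized, and the opinion is regressed on vocabulary plus controls with ordinary least squares. Only age and sex are included here; ethnicity would enter the same way, as additional dummy-coded columns. All data-generating numbers are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 958  # matches the study's n; everything below is simulated

age = rng.uniform(18, 80, n)
male = rng.integers(0, 2, n)                      # 0/1 dummy for sex
vocab = rng.normal(0, 1, n) + 0.01 * (age - 50)   # mild age confound (hypothetical)
opinion = (0.25 * vocab - 0.005 * (age - 50) + 0.1 * male
           + rng.normal(0, 1, n))

def zscore(v):
    return (v - v.mean()) / v.std()

# Intercept, standardized vocabulary, and raw controls. The outcome is standardized,
# so the vocabulary coefficient is a beta adjusted for age and sex.
X = np.column_stack([np.ones(n), zscore(vocab), age, male])
coef, *_ = np.linalg.lstsq(X, zscore(opinion), rcond=None)
beta_vocab = coef[1]
print(f"adjusted beta for vocabulary: {beta_vocab:.2f}")
```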

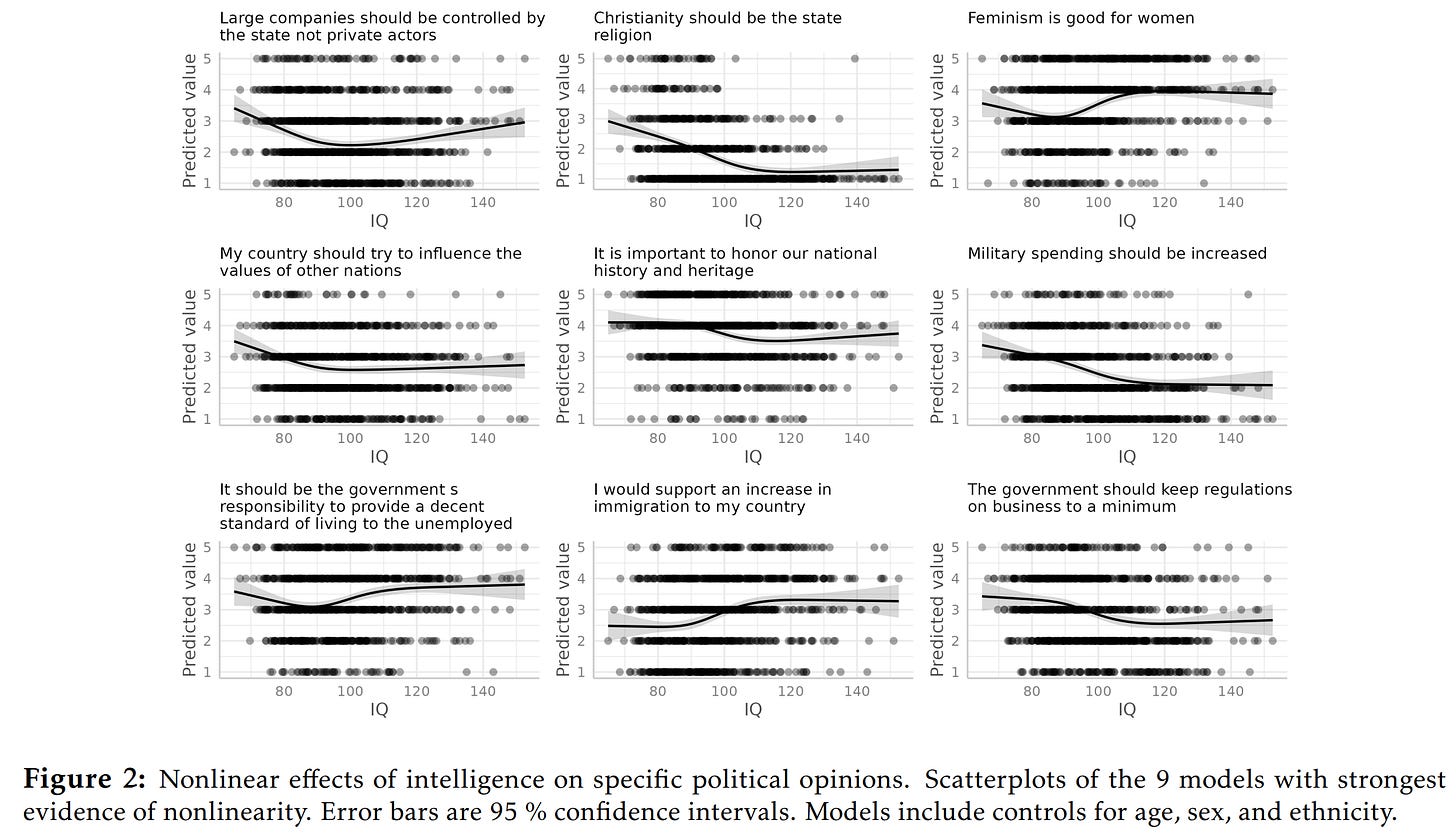

What about nonlinear effects? They turn out to be rather common: 15 of the 26 beliefs (58%) showed evidence (p < .05) of nonlinear effects. By chance alone, we would expect 5%, i.e. 1.3 of the 26 beliefs. With strict multiple-testing correction (Bonferroni, p < .05/26), 5 of the 26 beliefs still had nonlinear patterns. The most nonlinear ones are shown in the plot above. Amusingly, we get a midwit pattern for governments running large businesses, with both low and high intelligence people being in favor of this (oh dear). Others show flat stretches, such as whether feminism is good for women, where the association flattens out above 110 IQ.
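The chance-level arithmetic in the paragraph above can be checked in a few lines. The binomial tail probability assumes the 26 tests are independent, which is only an approximation because the opinions are correlated with one another, so read it as illustrative.

```python
from math import comb

n_tests, alpha = 26, 0.05

expected_false_positives = n_tests * alpha   # 1.3 beliefs by chance
bonferroni_alpha = alpha / n_tests           # about 0.0019 per test

# Probability of 15 or more "significant" results out of 26 if every null were true,
# treating the tests as independent (an assumption; the opinions are correlated).
p_15_or_more = sum(comb(n_tests, k) * alpha**k * (1 - alpha)**(n_tests - k)
                   for k in range(15, n_tests + 1))

print(expected_false_positives, round(bonferroni_alpha, 4), p_15_or_more)
```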

It may be more interesting to look at the overall pattern, and for this, we need to create an overall conservatism (rightism) score. The factor loadings are as follows:

The factor loadings were largely in line with expectations, with the amusing exception that sexual puritanism had a loading of 0. If one wants to ask only a few questions in the USA right now to make a solid guess about a person's overall political opinions, one should ask about abortion and income redistribution (absolute loadings of about 0.80). If we predict this conservatism score from vocabulary score and demographic controls, we get this pattern:
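A general score of this kind can be approximated by the first principal component of the item correlation matrix. This sketch is a stand-in, not the factor-analytic procedure used in the study: the items are simulated from one hypothetical factor, with the last item given a loading of 0 to mimic an item that is unrelated to the general dimension. Principal component loadings tend to be somewhat larger in magnitude than factor loadings, so the numbers will not match a factor analysis exactly.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 958

# Hypothetical item structure: one general "rightism" factor with loadings
# chosen for illustration; the last item is unrelated (loading 0).
true_loadings = np.array([0.80, -0.80, 0.60, 0.50, 0.0])
g = rng.normal(size=n)
noise_sd = np.sqrt(1 - true_loadings**2)
items = g[:, None] * true_loadings + rng.normal(size=(n, 5)) * noise_sd

# First principal component of the correlation matrix as a simple stand-in
# for a one-factor model.
R = np.corrcoef(items, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(R)          # eigenvalues in ascending order
v = eigvecs[:, -1] * np.sqrt(eigvals[-1])     # loadings on the largest component
v *= np.sign(v[0])                            # orient so item 1 loads positively
print(np.round(v, 2))

# Component scores: standardized items weighted by the loadings, then rescaled.
Z = (items - items.mean(0)) / items.std(0)
score = Z @ v
score = (score - score.mean()) / score.std()
```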

The main association is negative, beta = -0.22, but it is nonlinear. The negative value comes from the decline in conservatism starting around 95 IQ and ending around 120 IQ. The overall nonlinear pattern is far beyond chance (p = 0.0012 and 0.0008, depending on the number of spline terms specified). However, detecting a nonlinearity does not mean that it is located in the right tail. As far as I know, there is no analytic statistical test for the monotonicity of a spline function.
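The basic nonlinearity test can be sketched as a nested model comparison: fit a straight line, fit a natural (restricted) cubic spline, and ask with an F-test whether the extra spline terms reduce the residual sum of squares more than chance would. The study's exact specification (covariates, spline degrees of freedom, software) is not shown here, so the restricted cubic spline in Harrell's parameterization, the knots at the 5th, 35th, 65th and 95th percentiles (a common default for four knots), and the absence of covariates are all assumptions of this sketch.

```python
import numpy as np
from scipy import stats

def rcs_basis(x, knots):
    """Restricted (natural) cubic spline basis, Harrell's parameterization:
    cubic between knots, linear beyond the outer knots. Returns the k - 2
    nonlinear columns; the linear term x is added separately."""
    t = np.asarray(knots, dtype=float)
    k = len(t)
    pos3 = lambda u: np.clip(u, 0, None) ** 3
    scale = (t[-1] - t[0]) ** 2        # keeps column magnitudes comparable to x
    cols = []
    for j in range(k - 2):
        col = (pos3(x - t[j])
               - pos3(x - t[k - 2]) * (t[k - 1] - t[j]) / (t[k - 1] - t[k - 2])
               + pos3(x - t[k - 1]) * (t[k - 2] - t[j]) / (t[k - 1] - t[k - 2]))
        cols.append(col / scale)
    return np.column_stack(cols)

def rss(X, y):
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return resid @ resid

def nonlinearity_f_test(x, y, n_knots=4):
    """Nested F-test: do the spline terms improve on a straight line?"""
    knots = np.quantile(x, np.linspace(0.05, 0.95, n_knots))
    X_lin = np.column_stack([np.ones_like(x), x])
    X_spl = np.column_stack([X_lin, rcs_basis(x, knots)])
    rss_lin, rss_spl = rss(X_lin, y), rss(X_spl, y)
    df1 = X_spl.shape[1] - X_lin.shape[1]
    df2 = len(y) - X_spl.shape[1]
    F = ((rss_lin - rss_spl) / df1) / (rss_spl / df2)
    return F, stats.f.sf(F, df1, df2)

# Example on simulated data: decline up to IQ 115, then a rise (hypothetical shape).
rng = np.random.default_rng(3)
iq = rng.normal(100, 15, 958)
y = -0.04 * (iq - 100) + 0.10 * np.clip(iq - 115, 0, None) + rng.normal(0, 1, 958)
F, p = nonlinearity_f_test(iq, y)
print(f"F = {F:.1f}, p = {p:.2g}")
```

A small p from this test only says the curve is not a straight line; it says nothing about where the bend is or whether the curve turns upward in the right tail.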

So the question is: how reliable is that right-tail pattern? I had the same worry. When I initially did this study, I only had ~500 subjects, and I found this pattern. To test whether it was real, I collected another ~500 people, hence the total sample size of about 1,000. The pattern is seen in both independent samples, so this was a direct replication.

Next, I tried another function for modeling nonlinearity (a thin plate spline instead of a natural spline), and this only made the pattern clearer:

Next came a cruder method. Can we subset the dataset such that, above a given IQ threshold, the relationship is positive with p < .05? It turns out the answer is sort of:

The X axis value is the threshold used for inclusion. Thus, if we include almost the entire sample (threshold 80+), the linear beta is quite negative (-0.23, Y axis value). However, at thresholds above about 92 IQ, the beta becomes progressively more positive. If we use only the cases with IQ 117+, the beta becomes 0.35! The p-value at this threshold is 0.073 (p-values are shown on the points), which is not impressive but nevertheless suggestive, considering this was a directional test (meaning the p-value should be halved, to about 0.037).
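The threshold analysis can be sketched as a loop over nested subsets. Two assumptions to flag: the variables are standardized within each subset (the study may have standardized differently), and halving the p-value is only legitimate when the direction was specified in advance. Because the subsets are nested, the per-threshold estimates are strongly dependent and should not be read as separate tests. The data below are simulated with a hypothetical U-like shape.

```python
import numpy as np
from scipy import stats

def slopes_above_thresholds(iq, y, thresholds):
    """Regress standardized y on standardized IQ among cases with iq >= t.
    Returns a list of (threshold, beta, two-sided p, n) tuples."""
    results = []
    for t in thresholds:
        keep = iq >= t
        fit = stats.linregress(stats.zscore(iq[keep]), stats.zscore(y[keep]))
        results.append((t, fit.slope, fit.pvalue, int(keep.sum())))
    return results

def one_sided_p(beta, p_two_sided, expected_sign=1):
    """Convert a two-sided p to a one-sided p for a pre-specified direction."""
    if np.sign(beta) == expected_sign:
        return p_two_sided / 2
    return 1 - p_two_sided / 2

# Simulated data: decline up to IQ 115, then a rise (hypothetical values).
rng = np.random.default_rng(11)
iq = rng.normal(100, 15, 2000)
y = -0.04 * (iq - 100) + 0.10 * np.clip(iq - 115, 0, None) + rng.normal(0, 1, 2000)
for t, beta, p, n in slopes_above_thresholds(iq, y, [80, 92, 105, 117]):
    print(f"IQ >= {t}: beta = {beta:+.2f}, p = {p:.3g}, n = {n}")

print(one_sided_p(0.35, 0.073))  # the 117+ estimate from the text, halved: 0.0365
```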

What about using more dimensions of political ideology? One reviewer aptly pointed out that I had written a blogpost saying that political ideology is not unidimensional, yet here I am analyzing the data as if it were (bad Emil). So I extracted additional factor solutions with 2-5 factors (correlated factors, oblimin rotation). The fifth factor was a low data-quality factor, but the others were interpretable:

It turns out that the first factor was still a general factor and was almost entirely consistent across these analyses (r's 0.96 to 1.00). Furthermore, intelligence showed nonlinear associations with these factor scores as well. Some of the minor factors also showed a similar non-monotonic pattern, like the one for Christianity:

What do we make of this?

First, you need a long intelligence test to assess the right tail. This study had an unusually reliable test for the right tail. However, you also need quite a large sample. Despite gathering another 500 people to reach nearly 1,000 subjects, the study was still somewhat underpowered to see the exact pattern in the right tail. Maybe we should collect another 1,000 subjects, perhaps in the UK. Unfortunately, Prolific does not offer demographically representative samples from other countries, and I haven't found another data seller that offers high-quality data for other countries.

The typical elite human capital and midwit memes are sort of supported by the results. It may be that people in the right tail of intelligence hold different opinions than is apparent from correlations and linear regressions.

(Possibly the first study to include this meme.)

From a broader political perspective, it is less clear what to make of correlations between intelligence and some political belief. Generally, we know that smarter people have more accurate beliefs about most things (hence knowledge tests being highly g-loaded). However, they are perhaps also more likely to follow belief fashions and adopt "current thing" beliefs, especially if this is good for social status signaling (pronouns, trans and Ukraine flags in bio). I am therefore not so inclined to think that we can simply infer the best policy from what people in the right tail of intelligence think. However, I think it's probably a good starting point: if smart people overwhelmingly agree on some policy, there's a good chance it is optimal. If you are looking for more anti-EHC takes, Ubersoy has a critical blogpost.

I predict that wordcels favor some left-wing economic policies in pro-equity societies, but that intelligent people with a nonverbal tilt typically favor more free-market policies regardless of society.
