Is the ability to read minds an aspect of general intelligence?

Yes, but

You may have seen this test making the rounds on Twitter:

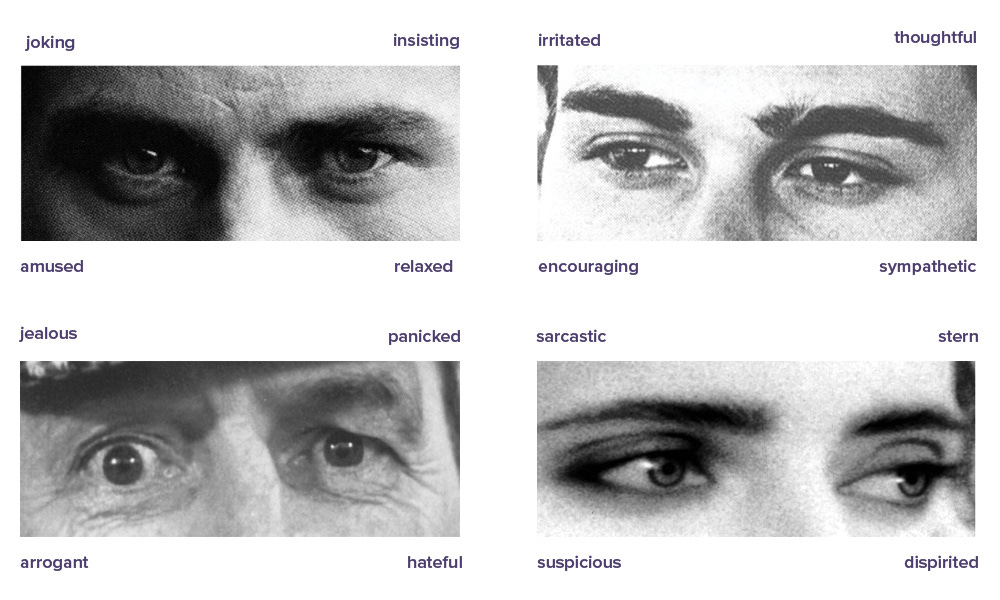

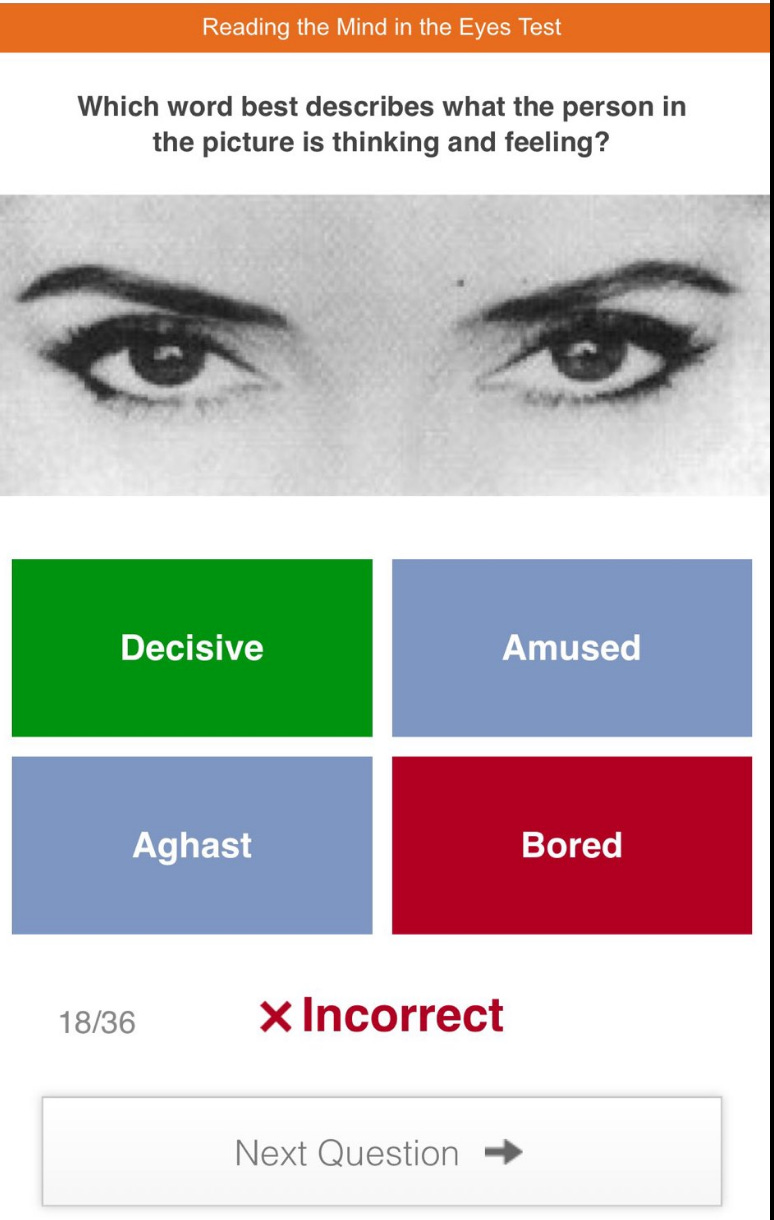

It's called the Reading the Mind in the Eyes Test (RMET). The subject is shown 36 such greyscale images of eyes and asked which of 4 emotions the person is experiencing.

In fact, you can take it right here for free.

The test is commonly interpreted as a measure of empathy in Paul Bloom's sense: the ability to infer or simulate another person's mental state. Alternatively, one could think of it as a reverse autism test (indeed, autists score much lower, by 1.15 standard deviations even after controlling for IQ).

There are some reasons to doubt this interpretation, however. First, many of the words used in the test, such as aghast and despondent, are difficult and would be failed on a vocabulary test by many native speakers of English. As such, the test will necessarily have some loading on English ability, and thus on intelligence. This effect will be larger if the subjects are non-native speakers of English.

Second, aside from the language use, every mental test ever invented has some correlation with general intelligence (called g). That's why it's so, well, general. Over many decades, many, many attempts have been made to find cognitive abilities that are unrelated to g, and all such attempts failed. A related prior attempt was memory for faces. Everybody knows someone who is bright but terrible at remembering faces. Humans seem to have evolved specific mental capabilities for dealing with our complex social behavior, so it makes sense from an evolutionary psychological perspective to posit a specialized ability for this task. However, when a meta-analysis of such studies was carried out, the correlation with intelligence turned out to be about .34.

So what about this mind in the eyes test? There is a 2014 meta-analysis of its relationship to intelligence:

> Although the Reading the Mind in the Eyes Test (RMET; Baron-Cohen et al. 1997, 2001) has been used as a measure of mental state understanding in over 250 studies, the extent to which it correlates with intelligence is seldom considered. We conducted a meta-analysis to investigate whether or not a relationship exists between intelligence and performance on the RMET. The analysis of 77 effects sizes with 3583 participants revealed a small positive correlation (r = .24) with no difference between verbal and performance abilities. We conclude that intelligence does play a significant role in performance on the RMET and that verbal and performance abilities contribute to this relationship equally. We discuss these findings in the context of the theory of mind and domain-general resources literature.

So overall a correlation of .24 was found. There was no correction for reliability, as the authors note:

> This estimate of the effect must be considered conservative (i.e., the true correlation would be higher if the RMET had high reliability), since this meta-analysis does not correct for attenuating factors that may reduce the magnitude of the true effect size (Hunter & Schmidt, 2004). In particular, it is likely to be attenuated by the imperfect reliability of the tasks involved. While many intelligence tests have high reliability, the reliability of the RMET is not known, but when measured, it has been low (e.g., Mar et al., 2006; Meyer & Shean, 2006). Thus, our effect size likely underestimates the true effect (Hunter & Schmidt, 2004).

Contrary to what the authors say, I found a number of reliability estimates:

- 155 German students, 3-week retest interval, r = .68, 24-item version.
- 56 Swedish students, 3-week retest interval, r = .60, 24-item version.

There are more studies, but their results are consistent enough.

So it seems the test is not that reliable despite having 36 items. (I find this odd, because I have taken this test many times over a decade, and my score is always around 30-33 out of 36.) Anyway, let's say the retest reliability is about .65. What, then, would the correlation with intelligence be after correcting for imperfect reliability? It's a matter of plugging the values into an R function (e.g. correct.cor() in psych), or simply dividing the observed correlation by the square root of the product of the two reliabilities. If we assume a reliability of .90 for the intelligence measures used in these studies and .65 for the RMET, the corrected correlation is .24 / √(.90 × .65) ≈ .31. This is very similar to the face recognition correlation.
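The correction described above is Spearman's classic correction for attenuation. The sketch below is a plain Python version of that formula, not the psych package's implementation; the reliabilities of .90 (intelligence) and .65 (RMET) are the assumed values from the text, not measured ones.

```python
import math


def correct_for_attenuation(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Spearman's correction: estimated correlation between true scores,
    given an observed correlation and the reliabilities of both measures."""
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must be in (0, 1]")
    return r_xy / math.sqrt(rel_x * rel_y)


# Observed RMET-intelligence correlation from the 2014 meta-analysis,
# combined with the *assumed* reliabilities of .90 and .65.
corrected = correct_for_attenuation(0.24, 0.90, 0.65)
print(f"corrected r = {corrected:.2f}")  # corrected r = 0.31
```

Lower assumed reliabilities push the corrected value up, so the result is only as good as the reliability estimates fed into it.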

What about group differences?

A massive 2018 study looked into these:

> Methods In total, 40 248 international, native-/primarily English-speaking participants between the ages of 10 and 70 completed one of five measures on TestMyBrain.org: RMET, a shortened version of RMET, a multiracial emotion identification task, an emotion discrimination task, and a non-social/non-verbal processing speed task (digit symbol matching).
>
> Results Contrary to other tasks, performance on the RMET increased across the lifespan. Education, race, and ethnicity explained more variance in RMET performance than the other tasks, and differences between levels of education, race, and ethnicity were more pronounced for the RMET than the other tasks such that more highly educated, non-Hispanic, and White/Caucasian individuals performed best.

They charge the test with bias, because education and race explained more variance in RMET performance than in performance on the other tasks, including the other tests of emotional ability. Specifically:

> Further, the differences between European/White v. non-European/non-White backgrounds differed by task. Specifically, in the RMET-36, European/White participants outperformed all other groups, (p's < 0.001); an effect replicated in the RMET-16 dataset (ps < 0.001). These differences were moderate-to-large in size, RMET-36 range d = 0.55–0.75, CLES = 65.02–70.32%, RMET-16 range d = 0.56–1.00, CLES = 65.34–76.09%, with largest differences between African/Black and European/White participants. In contrast, on other tasks, European/White participants less consistently outperformed non-European/non-White participants.

Given that, as shown above, the RMET is partly an intelligence test, though a poor one, group differences are expected. However, the gaps are too large to be explained by intelligence differences alone. If we assume a 1 standard deviation advantage of Europeans over Africans in intelligence, and that the RMET gap arises only through intelligence, then the expected gap is roughly the intelligence gap times the RMET's correlation with intelligence: about 1 × .31, or roughly 0.30 d. Instead, the observed gap is about 0.60 d. Given that all the people in the photos are Europeans, the larger-than-expected gap is perhaps because Europeans are better able to tell what other Europeans are feeling, a kind of ethnic accuracy boost. An alternative possibility is that Europeans really are just better at reading minds from the eyes in general. We don't know from these data to what extent each of these two possibilities explains the gap, but I would guess it's mainly the former.

Furthermore, the fact that the education correlation is also large suggests that the correlation with intelligence, and maybe with verbal ability (due to the vocabulary), has been underestimated. Perhaps this is due to the use of student samples, whose restricted range of ability attenuates correlations, so the true correlation with intelligence may be closer to r = .40.
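The back-of-the-envelope reasoning above can be made explicit. This is a minimal sketch under stated assumptions: the expected RMET gap is the intelligence gap scaled by the test's correlation with intelligence (i.e., the gap runs only through g), and range restriction in student samples is corrected with Thorndike's Case II formula. The 1 SD gap comes from the text; the restriction ratio u = 0.75 (student SD divided by population SD) is purely hypothetical, chosen only to show what it would take to move r from .31 to about .40.

```python
import math


def expected_gap(g_gap_d: float, r_with_g: float) -> float:
    """Expected standardized gap on a test whose only link to the groups
    runs through g: the g gap scaled by the test's correlation with g."""
    return g_gap_d * r_with_g


def correct_range_restriction(r: float, u: float) -> float:
    """Thorndike Case II correction for direct range restriction.

    u is the ratio of the restricted SD to the unrestricted SD (0 < u <= 1).
    """
    big_u = 1.0 / u
    return r * big_u / math.sqrt(1.0 + r**2 * (big_u**2 - 1.0))


# Assumed 1 SD intelligence gap and the reliability-corrected r of .31.
# The study quoted above reports an observed gap of roughly 0.60 d.
print(f"expected gap: {expected_gap(1.0, 0.31):.2f} d")  # expected gap: 0.31 d

# Hypothetical: the students' ability SD is 75% of the population SD.
r_pop = correct_range_restriction(0.31, 0.75)
print(f"r corrected for range restriction: {r_pop:.2f}")  # r corrected for range restriction: 0.40
```

With no restriction (u = 1) the correction leaves r unchanged; the smaller the student SD relative to the population, the larger the upward adjustment.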

Whatever the case may be exactly, the Reading the Mind in the Eyes Test clearly has a moderate relationship to intelligence, likely has a pro-European bias, and is not very reliable on top of this. So while it's a very cool idea for a test, it's hard to call this test awesome. Maybe someone can generate more eye images with specific emotions using LLMs and make a better test.

What does this mean for the claim, which I think you mentioned some time ago, that men's g-factor is higher than women's?

According to a cursory search, it looks like women have been doing consistently better at mind-reading than men, with effect sizes around 0.2:

https://doi.org/10.1016/j.cpnec.2022.100162

https://psycnet.apa.org/record/2013-09240-009

https://www.pnas.org/doi/abs/10.1073/pnas.2022385119

Here's my interpretation, correct me if I'm wrong:

1. The g-factor and g-loadings are calculated by factor analysis based on a collection of various cognitive tasks

2. These collections typically *do not* include mind-reading tasks (even though mind-reading is arguably a cognitive task, what else could it be?)

3. If we were to include the RMET (and similar emotion-related tasks) into the datasets used to calibrate the g-factor, that would rotate the vector a little bit, and slightly change the loadings of the different tasks

4. That would slightly increase the average IQ score of women and decrease the average of men, possibly explaining a part of the gender gap

5. That may or may not improve the accuracy of IQ scores for predicting job performance/anti-social behaviour/etc (I suspect that recognizing emotions is relevant for job performance, at least in some jobs)
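Point 3 can be illustrated with a toy calculation. This sketch uses the first principal component of a correlation matrix as a simple stand-in for g; that is a swapped-in technique, not the factor-analytic method used in real studies, and every correlation below is hypothetical, not taken from any dataset.

```python
import numpy as np


def first_pc_loadings(corr: np.ndarray) -> np.ndarray:
    """Loadings on the first principal component of a correlation matrix:
    the leading eigenvector scaled by the square root of its eigenvalue."""
    eigvals, eigvecs = np.linalg.eigh(corr)  # eigenvalues in ascending order
    vec = eigvecs[:, -1] * np.sqrt(eigvals[-1])
    return vec if vec.sum() >= 0 else -vec  # eigenvector sign is arbitrary


# Hypothetical correlations among four conventional cognitive tests.
battery = np.array([
    [1.00, 0.60, 0.50, 0.45],
    [0.60, 1.00, 0.55, 0.40],
    [0.50, 0.55, 1.00, 0.50],
    [0.45, 0.40, 0.50, 1.00],
])

# Add a hypothetical RMET-like task correlating .30 with each test.
rmet_r = np.full(4, 0.30)
extended = np.block([
    [battery, rmet_r[:, None]],
    [rmet_r[None, :], np.ones((1, 1))],
])

print("without RMET:", np.round(first_pc_loadings(battery), 2))
print("with RMET:   ", np.round(first_pc_loadings(extended), 2))
```

Comparing the two printed rows shows how adding one task shifts the loadings of the others; how much that would move average scores for men and women depends on real data this toy example does not have.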

Am I understanding this correctly?

I always wondered how the "correct" emotion of the eyes was determined by the test's creators. A poll? Interviews with the subjects in the photos?