Military intelligence and racial discrimination

US Naval Academy's folly

By now, you've probably heard that the US military, like many other militaries around the world, uses some kind of intelligence test for admission. The US even has a law restricting the enlistment or conscription of soldiers who score below certain thresholds:

10 U.S. Code § 520 - Limitation on enlistment and induction of persons whose score on the Armed Forces Qualification Test is below a prescribed level

(a) (1) The number of persons originally enlisted or inducted to serve on active duty (other than active duty for training) in any armed force during any fiscal year whose score on the Armed Forces Qualification Test is at or above the tenth percentile and below the thirty-first percentile may not exceed 4 percent of the total number of persons originally enlisted or inducted to serve on active duty (other than active duty for training) in such armed force during such fiscal year.

(2) Upon the request of the Secretary concerned, the Secretary of Defense may authorize an armed force to increase the limitation specified in paragraph (1) to not exceed 20 percent of the total number of persons originally enlisted or inducted to serve on active duty (other than active duty for training) in such armed force during such fiscal year. The Secretary of Defense shall notify the Committees on Armed Services of the Senate and the House of Representatives not later than 30 days after using such authority.

(b) A person who is not a high school graduate may not be accepted for enlistment in the armed forces unless the score of that person on the Armed Forces Qualification Test is at or above the thirty-first percentile; however, a person may not be denied enlistment in the armed forces solely because of his not having a high school diploma if his enlistment is needed to meet established strength requirements.

On an IQ scale normed to Whites (mean 100, SD 15), the 10th centile corresponds to an IQ of about 81 and the 31st centile to about 93. If the test percentiles are instead normed to the total population, the equivalent IQs are slightly lower.
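The percentile-to-IQ conversion above is just the inverse of the normal CDF. A minimal Python sketch, assuming IQ is normally distributed in the norming population with mean 100 and SD 15:

```python
from statistics import NormalDist

def percentile_to_iq(percentile, mean=100.0, sd=15.0):
    """Return the IQ score at a given percentile rank (0-100), assuming a
    normal distribution with the given mean and SD (the usual IQ metric)."""
    z = NormalDist().inv_cdf(percentile / 100)  # standard-normal quantile
    return mean + sd * z

# The two AFQT cutoffs mentioned in 10 U.S.C. § 520
for pct in (10, 31):
    print(pct, round(percentile_to_iq(pct), 1))
```

This gives roughly 81 and 93. To express total-population percentiles on a White-normed scale, one would pass a somewhat lower `mean` (the exact value is an assumption the reader has to supply), which shifts both cutoffs down by the same amount.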

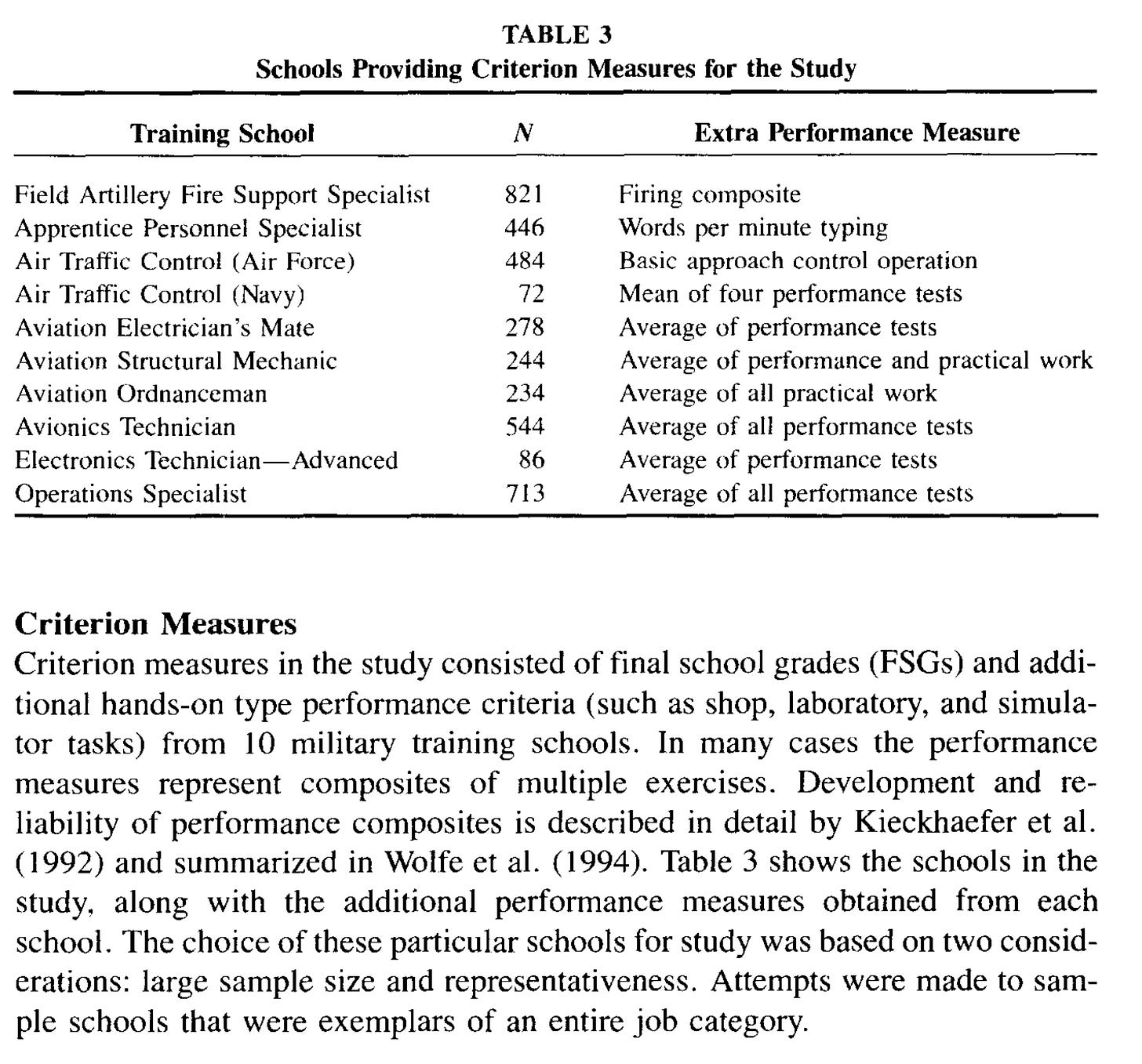

The US military occasionally runs large validation studies to see how well its tests predict performance and whether they can be improved in some way, usually by adding more specific tests or personality evaluations. To take one typical study of this kind, in 1995 Larson and Wolfe compared the existing ASVAB tests to a new set of experimental tests:

You may be familiar with the ASVAB tests (the AFQT is a composite of four of them) because they are the same tests used in the NLSY 1979 and 1997 datasets that are so commonly used in intelligence research. The ECAT (Enhanced Computer-Administered Tests) is the new set of tests. The sample sizes (~4000 in this case) and the job performance data for military roles are usually excellent:

And the results:

In this case, they used a simple approach: compute an overall score from both batteries combined. This doesn't squeeze out the maximum validity of the tests, because the only gain it captures is the better measurement of g that comes from using a more diverse set of tests. To squeeze out the maximum validity, one would use multiple regression with each test as a separate predictor (ideally, each item on the tests). Still, the observed validities of 0.77 for final school grades in the military academy and 0.58/0.62 in the larger sample with specific performance tests are very high. The new tests perhaps produced a gain of 0.04 in r (about 7%), which, if it is beyond chance, could be worth the effort (you only have to test people once).
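To illustrate why a unit-weighted total can leave validity on the table, here is a small Python/NumPy simulation. The data are entirely hypothetical (made-up loadings, not the Larson and Wolfe data): every test measures g, and one test also measures a job-relevant specific ability. Multiple regression can pick up that extra signal, while a simple sum mostly cannot.

```python
import numpy as np

def simulate_battery(n=4000, n_tests=6, seed=0):
    """Hypothetical data: every test loads on a common factor g, and test 0 also
    measures a job-relevant specific ability. All coefficients are made up."""
    rng = np.random.default_rng(seed)
    g = rng.standard_normal(n)
    specific = rng.standard_normal(n)
    tests = 0.7 * g[:, None] + 0.7 * rng.standard_normal((n, n_tests))
    tests[:, 0] += 0.5 * specific
    performance = 0.6 * g + 0.3 * specific + 0.7 * rng.standard_normal(n)
    return tests, performance

def composite_validity(tests, y):
    """Unit-weighted approach: standardize each test, sum them, correlate with y."""
    z = (tests - tests.mean(axis=0)) / tests.std(axis=0)
    return np.corrcoef(z.sum(axis=1), y)[0, 1]

def regression_validity(tests, y):
    """Multiple regression with each test as a separate predictor (plus an
    intercept); returns the in-sample multiple correlation R."""
    X = np.column_stack([np.ones(len(y)), tests])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return np.corrcoef(X @ beta, y)[0, 1]

tests, y = simulate_battery()
print(f"unit-weighted composite r = {composite_validity(tests, y):.3f}")
print(f"multiple regression R     = {regression_validity(tests, y):.3f}")
```

The regression R here is in-sample and therefore slightly optimistic; a real analysis would cross-validate the weights, although with samples around 4000 and a handful of predictors the shrinkage is small. Regressing on individual items would need much more care about overfitting.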

Why bring this up? Well, because Zach Goldberg published a landmark study of how the US selects students for its military academies: After Harvard: The Fight Against Race-Based Admissions at the US Naval Academy. You may have heard of the Students for Fair Admissions v. Harvard lawsuit, which resulted in a loss for Harvard:

On June 29, 2023, the Supreme Court issued a decision in Harvard that, by a vote of 6–2, reversed the lower court ruling. In the majority opinion, Chief Justice John Roberts held that affirmative action in college admissions is unconstitutional. Because of the absence of U.S. military academies in the cases, the lack of relevant lower court rulings, and the potentially distinct interests that the military academies may present, the Court, limited by Article III, did not decide the fate of race-based affirmative action in military academies.[13][14]

The lawsuit took nearly a decade to get from the lower courts to the Supreme Court, and the resulting decision effectively overturned prior rulings permitting the use of race in admissions, to make sure that it is definitely, really, for real, banned this time. This was one of those cases that came after Trump 1.0 added more conservative justices to the Court. The lawsuit that Goldberg wrote about was a parallel suit brought by the same plaintiff against a different defendant, the US Naval Academy:

The United States Naval Academy (USNA, Navy, or Annapolis) is a federal service academy in Annapolis, Maryland. It was established on 10 October 1845 during the tenure of George Bancroft as Secretary of the Navy. The Naval Academy is the second oldest of the five U.S. service academies and it educates midshipmen for service in the officer corps of the United States Navy and United States Marine Corps. It is part of the Naval University System. The 338-acre (137 ha) campus is located on the former grounds of Fort Severn at the confluence of the Severn River and Chesapeake Bay in Anne Arundel County, 33 miles (53 km) east of Washington, D.C., and 26 miles (42 km) southeast of Baltimore. The entire campus, known colloquially as the Yard, is a National Historic Landmark and home to many historic sites, buildings, and monuments. It replaced Philadelphia Naval Asylum in Philadelphia that had served as the first United States Naval Academy from 1838 to 1845 when the Naval Academy formed in Annapolis.[4]

The case was decided about a year and a half after the Harvard case, but the academy won despite the situation being roughly the same. During discovery, a lot of internal data was made public, so everybody can now see just how extreme the racial discrimination was. First, the admission rates (the percentage of applicants who were admitted):

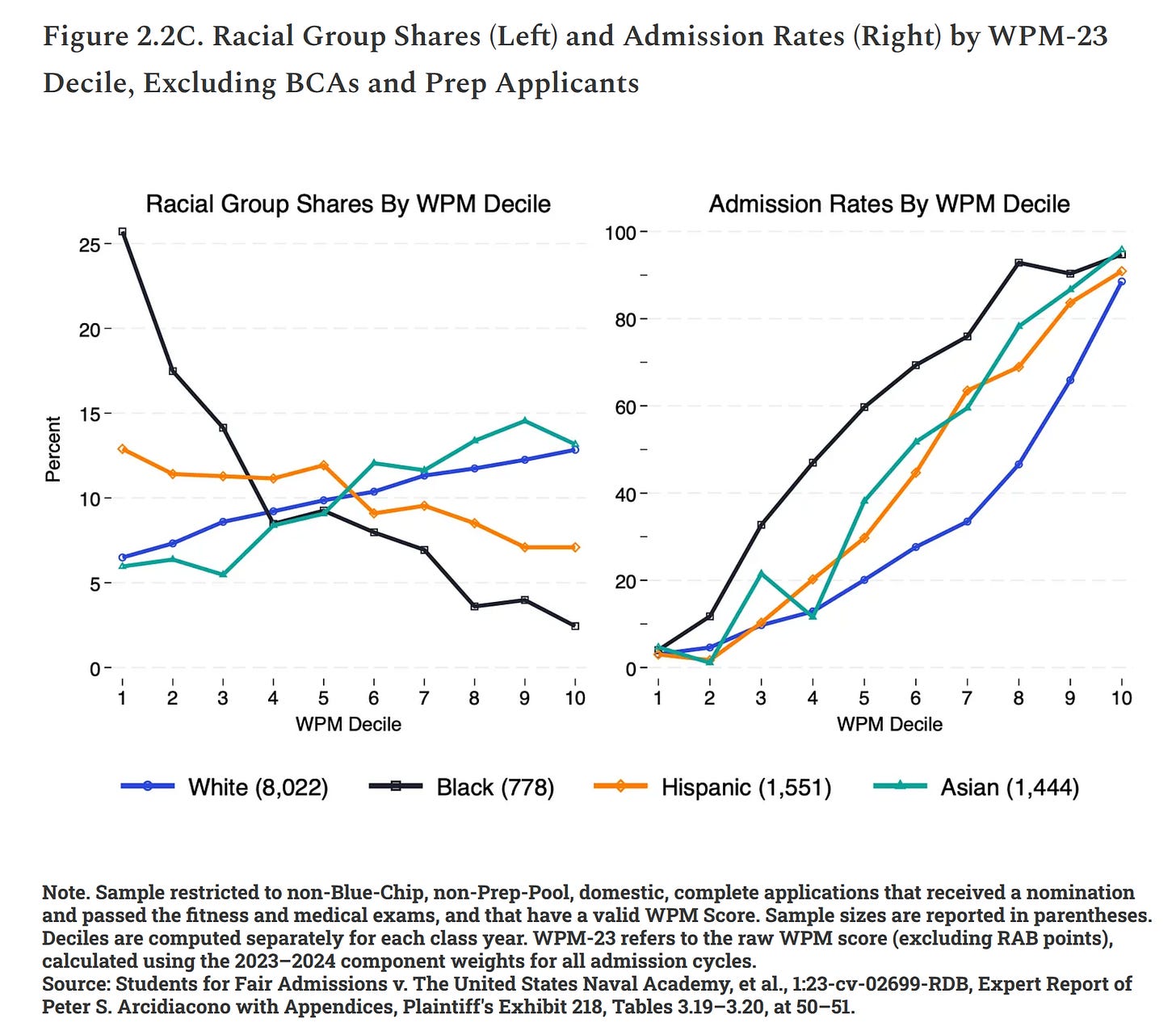

Admission rates by themselves do not say much about discrimination, because the groups may self-select into applying differently and their applicants may differ in the qualifications that affect admission. So let's look at the applicants and their scores:

The WPM is:

Once an applicant’s file is submitted, it is evaluated using the Whole Person Multiple (WPM) score, a composite metric calculated through an algorithm based on USNA’s weighting formula. Before the class of 2025 admissions cycle, the WPM score was determined by an applicant’s highest SAT math and verbal scores, high school class rank, rankings of athletic and non-athletic extracurricular activities, and character-related appraisals from math and English teachers (e.g., “Makes friends easily”). However, after adopting its “test-flexible” policy, USNA removed standardized test scores from the WPM calculation, redistributing their weight to class rank and extracurricular activities.[5]
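As a rough illustration of what "redistributing their weight" means mechanically, here is a Python sketch of a weighted composite. The component names, the weights, and the proportional redistribution rule are all hypothetical; the text does not give USNA's actual formula.

```python
# All component names and weights are hypothetical; the real WPM formula is not given in the text.
OLD_WEIGHTS = {
    "sat": 0.30,
    "class_rank": 0.30,
    "extracurricular": 0.25,      # athletic + non-athletic, merged for simplicity
    "teacher_appraisals": 0.15,
}

def drop_and_redistribute(weights, dropped, recipients):
    """Remove one component and hand its weight to `recipients` in proportion
    to their current weights (an assumed rule; the real split is not stated)."""
    new = {k: v for k, v in weights.items() if k != dropped}
    freed = weights[dropped]
    base = sum(new[k] for k in recipients)
    for k in recipients:
        new[k] += freed * new[k] / base
    return new

def composite(scores, weights):
    """Weighted sum of component scores, assumed to be on a common 0-100 scale."""
    return sum(w * scores[k] for k, w in weights.items())

NEW_WEIGHTS = drop_and_redistribute(OLD_WEIGHTS, "sat", ["class_rank", "extracurricular"])
applicant = {"sat": 90, "class_rank": 60, "extracurricular": 70, "teacher_appraisals": 80}
print(composite(applicant, OLD_WEIGHTS), composite(applicant, NEW_WEIGHTS))
```

Under these assumed weights, a hypothetical applicant whose strongest component was the SAT loses ground once test scores are dropped, while one with a strong class rank gains.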

In other words, the WPM is USNA's composite score for each applicant, built from the criteria it selects on. If admission followed the WPM alone, the mean WPM of admitted students would be fairly similar across groups, though not exactly equal, since it also depends on how each group's scores are distributed above the cutoff. Top-down selection is the most meritocratic method, as it maximizes the average composite score of those admitted. In this method, you start by ranking all applicants on your composite, then admit people from the top down until you run out of slots. If someone declines the offer, you offer the spot to the next person, and so on. In the plot, we see that Asian applicants had the highest average WPM and Blacks the lowest, while among the admitted candidates, Whites had the highest WPM and Blacks the lowest. This alone doesn't tell us whether there is any bias either. We have to look at the probability of acceptance at a given score, by race:

In this figure, the racial discrimination is clearly visible. A slightly below-average applicant (decile 5) has about a 60% chance of being accepted if he is Black, but only 20% if he is White, with the other groups in between (Hispanic 30%, Asian 38%). Clearly, the admissions officers are trying to increase the numbers of non-Whites at the cost of Whites' chances.
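To make the comparison in the figure concrete, the Python sketch below implements the top-down rule described above and then tabulates the share of applicants who receive an offer within each (group, score decile) cell, with deciles cut on the pooled applicant pool. The `Applicant` fields and the tiny example pool are hypothetical stand-ins for applicant-level data. Under race-blind top-down selection, an offer depends only on rank, so such curves would come out nearly identical across groups (not exactly, because the cutoff can fall inside a decile); large gaps at the same decile indicate that something other than the composite is driving decisions.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Applicant:
    group: str            # e.g. self-reported race (hypothetical field)
    score: float          # composite such as the WPM (hypothetical field)
    accepts_offer: bool   # whether they would accept an offer (hypothetical)

def top_down_select(applicants, slots):
    """Rank everyone on the composite and extend offers from the top down.
    A decliner is skipped and the place goes to the next person in line."""
    offered, enrolled = [], []
    for a in sorted(applicants, key=lambda a: a.score, reverse=True):
        if len(enrolled) == slots:
            break
        offered.append(a)
        if a.accepts_offer:
            enrolled.append(a)
    return offered, enrolled

def offer_rate_by_decile(applicants, offered):
    """Share of applicants receiving an offer in each (group, decile) cell.
    Deciles are cut on the pooled pool, so groups are compared at equal scores."""
    ranked = sorted(applicants, key=lambda a: a.score)
    n = len(ranked)
    decile = {id(a): 1 + (10 * i) // n for i, a in enumerate(ranked)}
    offered_ids = {id(a) for a in offered}
    counts = defaultdict(lambda: [0, 0])  # (group, decile) -> [offers, applicants]
    for a in applicants:
        cell = counts[(a.group, decile[id(a)])]
        cell[0] += int(id(a) in offered_ids)
        cell[1] += 1
    return {key: offers / total for key, (offers, total) in counts.items()}

pool = [Applicant("A", 10, False), Applicant("B", 9, True), Applicant("A", 8, True),
        Applicant("B", 7, True), Applicant("A", 6, True), Applicant("B", 5, True)]
offered, enrolled = top_down_select(pool, slots=3)
print([a.score for a in offered])  # [10, 9, 8, 7]: the top scorer declined, so one extra offer goes out
print(offer_rate_by_decile(pool, offered))
```

With real data, one would plot these rates against the decile for each group; that is the kind of comparison the figure makes.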

Combining this with the validity evidence above, we know that the US Naval Academy has been consciously decreasing the performance of the US military to gain some diversity points. I doubt America's enemies would engage in such foolish hiring practices. This might also have contributed to the general decline in military applicants, as White conservatives have historically been the main source of US military recruits, and they don't want to fight for the great Woke (Who wants to fight for their country?). We also know from practical experience that admitting subpar candidates to the military results in worse outcomes. In the most famous case, Project 100,000 during the Vietnam War (1966-1971), the US military lowered its standards to get more recruits to use as cannon fodder. The person responsible was Secretary of Defense Robert McNamara, and the recruits were given names such as McNamara's Morons and McNamara's Misfits, which give you an idea of how they fared. There's a book about this, McNamara's Folly: The Use of Low-IQ Troops in the Vietnam War, but I haven't read it yet. Gwern has an in-depth summary of the consequences of admitting subpar candidates.

The report goes into much more detail, with extensive analysis of the arguments presented during the trial and the judge's conclusions. It certainly deserves a wider readership, which is one of the reasons why I am promoting it here. So jump over to Zach's Substack and have a look around.

Emil et al--I started my career in 1971 with a large state in the US doing research on civil service tests. hired as a result of the Griggs v. Duke Power SC case. test dev, validation studies etc. for several years before moving. nothing has changed. certain minorities score lower on so many measures and organizations create all sorts of verbiage and sleight of hand to increase the selection of certain minorities who otherwise simply don't score well. we were doing what was called "differential validity" studies, i.e., were differences in test scores associated with differences in job performance? yes, sometimes. don't know how further research has turned out as I went on to other areas. for me, The Global Bell Curve (Lynn) says it all.

McNamara, huh? The asshole that just keeps on giving. There is a very special place in Hell for that one.