Reverse publication bias: a collection

See also Sesardić's conjecture, a related idea.

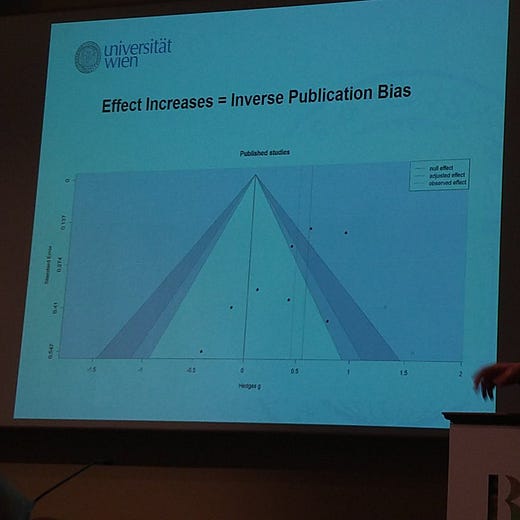

Publication bias as normally considered is really positive publication bias, i.e., the bias is away from zero, towards finding results larger than reality. There is another, rarer form, called reverse publication bias or negative publication bias, where published results are biased towards the null. This pattern results from researchers' own biases about what counts as noteworthy to report, whether they want to publish it, and whether it can get through peer and editorial review. All of these things are related to the political ideology of the researchers and their academic environment (self-censorship). Thus, given the massive political ideology skew in social science, we expect:

Positive publication bias for left-wing-friendly results, and for results in general

Negative/reverse publication bias for left-wing-unfriendly results
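To make the two directions concrete, here is a toy simulation. It is only a sketch under assumptions I made up for illustration: the true effect (0.3), the sample sizes, and the publication probabilities are invented, and the function name `simulate_published_mean` is hypothetical. None of it comes from the studies listed in this post. Every simulated study estimates the same true effect, and only the chance of publication depends on whether the result is significant.

```python
import random
import statistics


def simulate_published_mean(true_effect, n_studies, p_publish_sig,
                            p_publish_nonsig, seed=0):
    """Mean published effect size under selective publication.

    All numbers here are illustrative assumptions, not estimates from
    any real literature.
    """
    rng = random.Random(seed)
    published = []
    for _ in range(n_studies):
        n = rng.randint(20, 200)      # assumed per-group sample size
        se = (2 / n) ** 0.5           # approximate SE of a standardized mean difference
        estimate = rng.gauss(true_effect, se)
        significant = abs(estimate / se) > 1.96  # two-sided test at 5%
        p_publish = p_publish_sig if significant else p_publish_nonsig
        if rng.random() < p_publish:
            published.append(estimate)
    return statistics.mean(published)


TRUE_EFFECT = 0.3
# No selection: everything gets published.
unbiased = simulate_published_mean(TRUE_EFFECT, 20000, 1.0, 1.0)
# Positive publication bias: nonsignificant results are mostly filed away.
positive = simulate_published_mean(TRUE_EFFECT, 20000, 1.0, 0.2)
# Reverse publication bias: significant (undesired) results are mostly filed away.
reverse = simulate_published_mean(TRUE_EFFECT, 20000, 0.2, 1.0)

print(f"true effect:            {TRUE_EFFECT}")
print(f"no selection:           {unbiased:.3f}")
print(f"positive selection:     {positive:.3f}")
print(f"reverse selection:      {reverse:.3f}")
```

Under these assumptions the published mean lands above the true effect when significant results are favored, and below it (towards the null) when they are disfavored.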

Below I list the examples I know of.

GPA and intelligence

Roth, B., Becker, N., Romeyke, S., Schäfer, S., Domnick, F., & Spinath, F. M. (2015). Intelligence and school grades: A meta-analysis. Intelligence, 53, 118-137.

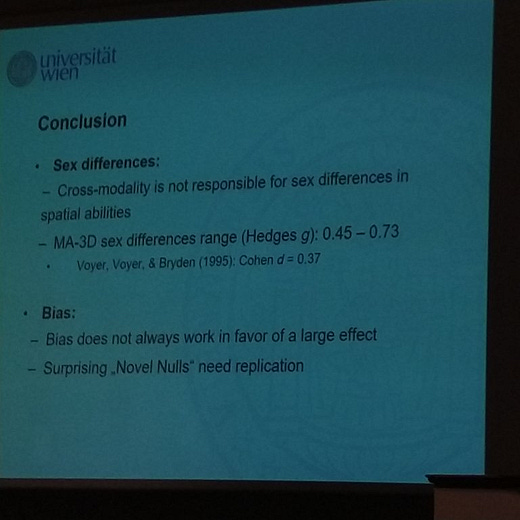

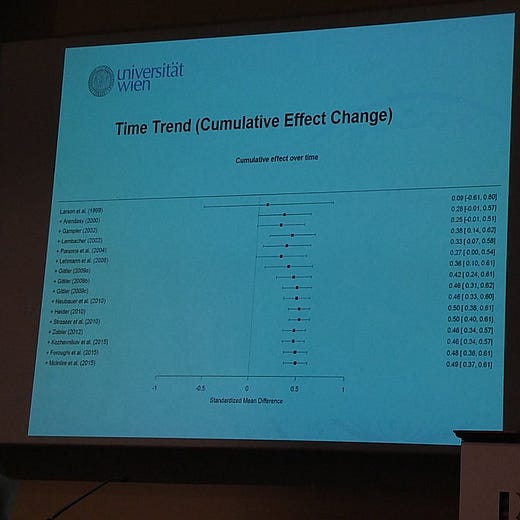

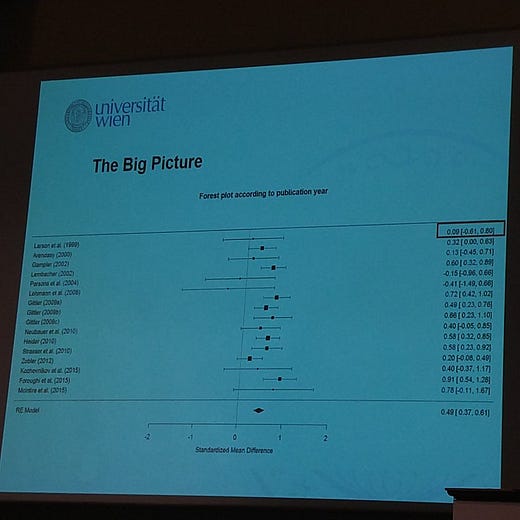

Sex difference in spatial ability

This study was still unpublished when I asked Jakob about it on Apr 11, 2019.

Race difference in personality (OCEAN)

Tate, B. W., & McDaniel, M. A. (2008). Race differences in personality: An evaluation of moderators and publication bias. Preprint from before preprints were cool.

Other

One can find recent discussions by searching Twitter for reverse publication bias or, more strictly, with quotes: "reverse publication bias".

A bit of a side interest is the case where people are not trying to suppress some finding, but just want to avoid a statistical test showing that an assumption is violated. This can come from testing group means after randomization (significant differences here are hopefully all false positives, which should happen 5% of the time), or from testing model assumptions (e.g. normal distribution). There are a lot of these kinds of meta papers; two examples:

Chuard, P. J., Vrtílek, M., Head, M. L., & Jennions, M. D. (2019). Evidence that nonsignificant results are sometimes preferred: Reverse P-hacking or selective reporting? PLoS Biology, 17(1), e3000127.

Snyder, C., & Zhuo, R. (2018). Sniff Tests in Economics: Aggregate Distribution of Their Probability Values and Implications for Publication Bias (No. w25058). National Bureau of Economic Research.

These papers concern auxiliary tests within studies (checks of assumptions), not tests of main findings that are undesired.
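The randomization case above can be sketched with another toy simulation. Again, everything here is an assumption for illustration: the trial count, the group size, the 50% chance of reporting a significant balance test, and the function name `balance_test_rates` are all made up, and this is not the method used in either paper. Two groups are drawn from the same distribution, so every significant baseline difference is a false positive.

```python
import random
from statistics import NormalDist, fmean


def balance_test_rates(n_trials=5000, n_per_group=50, p_report_sig=0.5, seed=1):
    """Share of significant baseline tests, before and after selective reporting.

    Illustrative assumptions only: the covariate is N(0, 1) in both groups
    and a z-test with known variance is used.
    """
    rng = random.Random(seed)
    std_normal = NormalDist()
    se = (2 / n_per_group) ** 0.5  # SE of the difference in means, variance known to be 1
    n_sig = 0
    n_nonsig = 0
    n_sig_reported = 0
    for _ in range(n_trials):
        mean_a = fmean(rng.gauss(0, 1) for _ in range(n_per_group))
        mean_b = fmean(rng.gauss(0, 1) for _ in range(n_per_group))
        z = (mean_a - mean_b) / se
        p_value = 2 * (1 - std_normal.cdf(abs(z)))
        if p_value < 0.05:
            n_sig += 1
            # Assumed selective reporting: significant imbalances are
            # reported only some of the time.
            if rng.random() < p_report_sig:
                n_sig_reported += 1
        else:
            n_nonsig += 1
    honest_rate = n_sig / n_trials
    reported_rate = n_sig_reported / (n_sig_reported + n_nonsig)
    return honest_rate, reported_rate


honest_rate, reported_rate = balance_test_rates()
print(f"significant share, all tests:      {honest_rate:.3f}")
print(f"significant share, reported tests: {reported_rate:.3f}")
```

With honest reporting the share of significant tests should sit near 5%. A reported share clearly below that is the kind of deficit these meta papers look for.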

I first heard of this concept in ~2012 from Neuroskeptic:

Some have also tried to explain away replication failures as being a kind of reverse p-hacking: