Scientific misconduct by ancestry/country

There are a few studies on this already. Misconduct is rare, both per event and per person, so sampling error is large and problematic (cf. the discussion in the esports paper). Setting that aside, a quick literature survey allows some general conclusions.

Oransky, I. (2018). Volunteer watchdogs pushed a small country up the rankings. Science.

This is based on data from the RetractionWatch database. It is a somewhat wrong approach for the ancestry question, because a lot of fraud committed in Western countries is done by foreign researchers, and country of affiliation does not capture that. One needs to do the analyses by name, inferring the ancestry of each person with a machine learning model built on data from e.g. behindthename.com (see my prior study on first names). As I noted on Twitter, the most famous faker in Denmark is Milena Penkowa. That doesn't sound very Danish; she is actually half Bulgarian.

If we look at the top list of fraudsters in RW database:

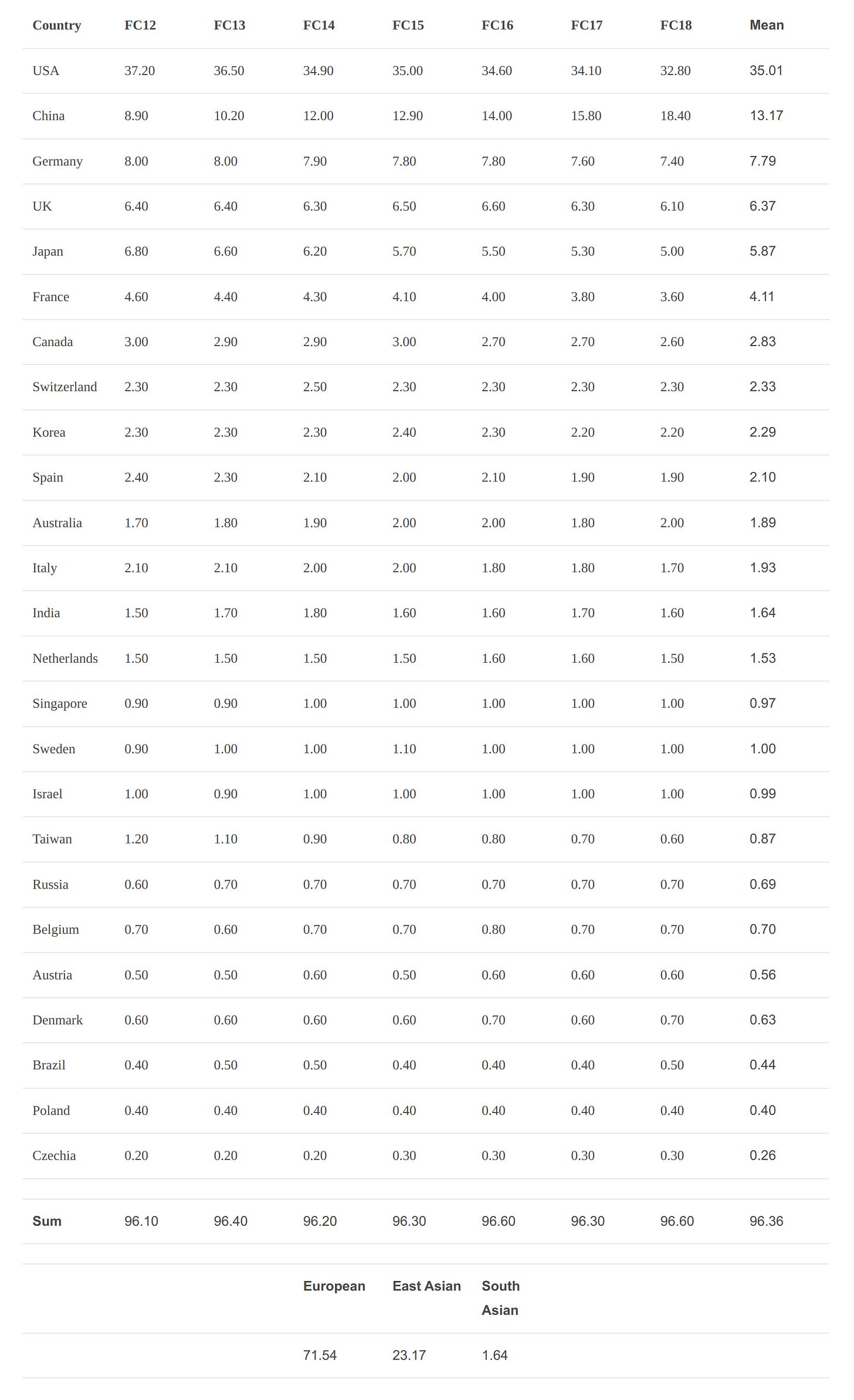

I manually coded these by googling them and, where that was not helpful, by relying on names. I don't know of any ancestry- or name-based count of scientific productivity, but we can use the Nature index that Anatoly Karlin wrote about recently and do a crude count (meaning I allocate Euro-dominant countries entirely to European, including Brazil and Israel):

Europeans produce ~72% of 'good science' in 2012-2018, East Asians ~23% and South Asians ~2%, and there is a remainder category of ~4%. Compared with the top list, Europeans' share of science output is larger than their share of the top science cheaters, and the reverse holds for the other groups.

Ataie-Ashtiani, B. (2018). World map of scientific misconduct. Science and engineering ethics, 24(5), 1653-1656.

A comparative world map of scientific misconduct reveals that countries with the most rapid growth in scientific publications also have the highest retraction rate. To avoid polluting the scientific record further, these nations must urgently commit to enforcing research integrity among their academic communities.

They give us a nice table, and I did the same kind of crude calculation (grouping Latin Americans as Europeans, on the assumption that the people who do science there are mostly of European ancestry):

A staggering ratio for East Asians.

Fanelli, D., Costas, R., Fang, F. C., Casadevall, A., & Bik, E. M. (2019). Testing hypotheses on risk factors for scientific misconduct via matched-control analysis of papers containing problematic image duplications. Science and engineering ethics, 25(3), 771-789.

It is commonly hypothesized that scientists are more likely to engage in data falsification and fabrication when they are subject to pressures to publish, when they are not restrained by forms of social control, when they work in countries lacking policies to tackle scientific misconduct, and when they are male. Evidence to test these hypotheses, however, is inconclusive due to the difficulties of obtaining unbiased data. Here we report a pre-registered test of these four hypotheses, conducted on papers that were identified in a previous study as containing problematic image duplications through a systematic screening of the journal PLoS ONE. Image duplications were classified into three categories based on their complexity, with category 1 being most likely to reflect unintentional error and category 3 being most likely to reflect intentional fabrication. We tested multiple parameters connected to the hypotheses above with a matched-control paradigm, by collecting two controls for each paper containing duplications. Category 1 duplications were mostly not associated with any of the parameters tested, as was predicted based on the assumption that these duplications were mostly not due to misconduct. Categories 2 and 3, however, exhibited numerous statistically significant associations. Results of univariable and multivariable analyses support the hypotheses that academic culture, peer control, cash-based publication incentives and national misconduct policies might affect scientific integrity. No clear support was found for the “pressures to publish” hypothesis. Female authors were found to be equally likely to publish duplicated images compared to males. Country-level parameters generally exhibited stronger effects than individual-level parameters, because developing countries were significantly more likely to produce problematic image duplications. 
This suggests that promoting good research practices in all countries should be a priority for the international research integrity agenda.

Which produced this (low quality) figure:

The data are pretty noisy, but among the countries with p<.05, we see Germany and Japan below the USA, and Argentina, Belgium, India, China and others above it. I don't know what is up with Belgium here, but otherwise the results aren't so surprising.

All in all, the Hajnal pattern applies. Everybody cheats, but people outside Hajnal cheat a lot more. There are more data out there, a lot of it private. A friend of mine works with applications from foreigners who want to study in the UK (scholarships). Applicants send in essays and the like, along with English test scores (TOEFL etc.). The agencies screen the essays for plagiarism, so one gets a per capita rate of plagiarism. Applicants who pass the screening are given an interview in English. Often, people with perfect essays can't seem to speak English very well, which is generally because they hired someone to write their essays for them. This is not detectable by plagiarism testing, but it results in massive discrepancies between written and spoken English ability. One can also look up various cheating scandals, as summarized by Those Who Can See.

Thai heist movie, except the goal is to cheat in tests, not steal money from banks.

Edited to add on 2021-07-27: a new study confirms what we know:

Carlisle, J. B. (2021). False individual patient data and zombie randomised controlled trials submitted to Anaesthesia. Anaesthesia, 76(4), 472-479.

Concerned that studies contain false data, I analysed the baseline summary data of randomised controlled trials when they were submitted to Anaesthesia from February 2017 to March 2020. I categorised trials with false data as ‘zombie’ if I thought that the trial was fatally flawed. I analysed 526 submitted trials: 73 (14%) had false data and 43 (8%) I categorised zombie. Individual patient data increased detection of false data and categorisation of trials as zombie compared with trials without individual patient data: 67/153 (44%) false vs. 6/373 (2%) false; and 40/153 (26%) zombie vs. 3/373 (1%) zombie, respectively. The analysis of individual patient data was independently associated with false data (odds ratio (95% credible interval) 47 (17–144); p = 1.3 × 10−12) and zombie trials (odds ratio (95% credible interval) 79 (19–384); p = 5.6 × 10−9). Authors from five countries submitted the majority of trials: China 96 (18%); South Korea 87 (17%); India 44 (8%); Japan 35 (7%); and Egypt 32 (6%). I identified trials with false data and in turn categorised trials zombie for: 27/56 (48%) and 20/56 (36%) Chinese trials; 7/22 (32%) and 1/22 (5%) South Korean trials; 8/13 (62%) and 6/13 (46%) Indian trials; 2/11 (18%) and 2/11 (18%) Japanese trials; and 9/10 (90%) and 7/10 (70%) Egyptian trials, respectively. The review of individual patient data of submitted randomised controlled trials revealed false data in 44%. I think journals should assume that all submitted papers are potentially flawed and editors should review individual patient data before publishing randomised controlled trials.
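Given the sampling error caveat at the top, it helps to put intervals on the per-country false data rates quoted in the abstract. The following is a minimal sketch, not part of Carlisle's analysis: it uses only the five count pairs quoted above (with whatever denominators the abstract used), and the Wilson score interval is my choice of method. The names `wilson_interval`, `false_data` and `summarise` are made up for illustration.

```python
from math import sqrt


def wilson_interval(k, n, z=1.96):
    """Approximate 95% Wilson score interval for the proportion k/n."""
    p = k / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# (trials with false data, trials counted), exactly as quoted in the abstract.
false_data = {
    "China": (27, 56),
    "South Korea": (7, 22),
    "India": (8, 13),
    "Japan": (2, 11),
    "Egypt": (9, 10),
}


def summarise(counts):
    """Return (country, rate, lower, upper) for each entry in counts."""
    rows = []
    for country, (k, n) in counts.items():
        lower, upper = wilson_interval(k, n)
        rows.append((country, k / n, lower, upper))
    return rows


for country, rate, lower, upper in summarise(false_data):
    print(f"{country:12s} {rate:5.0%}  [{lower:.0%}, {upper:.0%}]")
```

With denominators this small (10 to 56 trials), the intervals are wide, so the ordering of countries by point estimate should be read with caution.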

Table of results in detail:

Difficult data presentation, let's re-do it:

Here, of course, we are guessing that the guilty people in the Western countries are likely of foreign origin. The studies aren't named, so I couldn't check this (there is an appendix that lists all studies, but no names or links). The Swiss are obviously not known for being dishonest.

Fuck you Diederik Stapel, you put the Netherlands to shame.

The funny thing is that Ataie-Ashtiani, who himself has presented relatively good data on global scientific fraud, is an Iranian.

It's like a African person giving you African crime data.